Strategic Resource Allocation for New Technology in Crime Labs: A 2025 Guide for Forensic Leaders

Abstract

This article provides a comprehensive framework for researchers, scientists, and drug development professionals navigating the complex challenge of integrating new technologies into resource-constrained forensic laboratories. It explores the current crisis of evidence backlogs and funding shortfalls, outlines proven methodologies for planning and implementation, offers strategies for troubleshooting, optimization, and staff retention, and validates these approaches through a comparative analysis of high-performing labs and real-world success stories. The goal is to equip forensic leaders with actionable strategies to improve operational efficiency, secure funding, and deliver justice in an era of rapid technological change.

The Crisis and The Catalyst: Understanding the Modern Forensic Lab's Resource Dilemma

Technical Support Center: Troubleshooting Guides and FAQs

This section addresses common operational and technical challenges in forensic laboratories, providing actionable guidance grounded in current research and field expertise.

Frequently Asked Questions (FAQs)

- Q: Our lab is facing severe backlogs, particularly in DNA casework. What are some proven strategies for reducing turnaround times?

- A: Implementing workflow redesign methodologies like Lean Six Sigma has demonstrated significant success. For instance, the Louisiana State Police Crime Laboratory applied these principles and reduced their average DNA turnaround time from 291 days to just 31 days, while increasing throughput from 50 to 160 cases per month [1]. Key steps include value-stream mapping to identify bottlenecks, implementing case triage systems, and cross-training analysts.

- Q: With federal funding becoming less certain, how can labs fund innovation and capacity-building?

- A: Proactively pursuing targeted grant programs is crucial. The Capacity Enhancement for Backlog Reduction (CEBR) competitive grants can fund technical projects, such as validating new DNA extraction methods for difficult evidence [1]. The Paul Coverdell Forensic Science Improvement Grants, though facing proposed cuts, can fund cross-training of analysts across disciplines and cover accreditation costs, creating a more flexible and resilient workforce [1].

- Q: What is a major emerging technology in digital evidence management?

- A: The eDiscovery industry is witnessing deeper integration of Large Language Models (LLMs) and AI [2]. These technologies are moving beyond simple document review to assist in predictive coding, data classification, and anomaly detection. This is critical for managing the diverse data from modern communication tools like Slack, MS Teams, and WhatsApp, helping legal and forensic teams to strategize more effectively and reduce the time and cost associated with legal discovery [2].

- Q: Our toxicology unit is overwhelmed by drug-driving cases. What are the options for managing this demand?

- A: A multi-pronged approach is necessary. First, develop a Memorandum of Understanding (MOU) with client agencies to define service levels and manage expectations [3]. Second, consider a mixed model of service delivery: the Scottish Police Authority, for example, combines in-house capacity with planned outsourcing to meet toxicology demand that is running 20% above agreed levels [3]. For a long-term solution, building an "Invest to Automate" business case can create a sustainable model for handling projected future demand [3].
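At its core, the mixed model is capacity arithmetic: whatever demand exceeds in-house capacity must be outsourced. The sketch below illustrates this under stated assumptions; only the 20% overrun comes from the text, while the MOU level and in-house capacity are hypothetical placeholders.

```python
def plan_capacity(agreed_level, demand_ratio, in_house_capacity):
    """Split projected monthly demand between in-house testing and outsourcing.

    agreed_level: cases/month defined in the MOU (hypothetical figure below)
    demand_ratio: actual demand relative to the agreed level (1.20 = 20% over)
    in_house_capacity: cases/month the lab can process itself (hypothetical)
    """
    demand = round(agreed_level * demand_ratio)
    in_house = min(demand, in_house_capacity)
    outsourced = demand - in_house  # overflow sent to an accredited external provider
    return {"demand": demand, "in_house": in_house, "outsourced": outsourced}


# Hypothetical: 1,000 cases/month agreed, demand 20% above plan, capacity 1,050.
plan = plan_capacity(agreed_level=1000, demand_ratio=1.20, in_house_capacity=1050)
print(plan)  # {'demand': 1200, 'in_house': 1050, 'outsourced': 150}
```

Running the same function on projected future demand shows how quickly outsourcing volume (and cost) grows, which is the kind of figure an "Invest to Automate" business case would compare against the cost of added in-house capacity.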

Troubleshooting Common Experimental & Operational Challenges

- Challenge: Difficulty detecting benzodiazepines in Drug-Facilitated Sexual Assault (DFSA) cases due to their short metabolic half-life.

- Solution: Shift analytical focus from traditional biological matrices (blood, urine) to the source material itself. A 2025 study demonstrated that Extractive-Liquid Sampling Electron Ionization-Mass Spectrometry (E-LEI-MS) can successfully detect benzodiazepines directly from fortified cocktail residues on a glass surface, simulating a crime scene sample [4]. This ambient ionization technique requires minimal sample preparation and provides results in minutes, overcoming the time-sensitive detection window in biological samples.

- Challenge: The need for rapid, on-site screening of pharmaceuticals for quality control or counterfeit detection.

- Solution: Implement ambient ionization mass spectrometry techniques such as E-LEI-MS [4]. In the cited study, the method was used to analyze 20 different pharmaceutical drugs directly, without any pre-treatment, identifying both active pharmaceutical ingredients and excipients. Because it pairs ambient sampling with electron ionization, the resulting spectra can be compared directly against established EI spectral libraries.

- Challenge: Pressure to adopt new technology without sufficient resources for validation or staffing.

- Solution: Develop a staged implementation and advocacy plan. Use preliminary data from pilot projects, like the Michigan State Police's use of a CEBR grant to validate low-input DNA methods, which yielded a 17% increase in interpretable profiles [1]. Present this data alongside a clear cost-benefit analysis to stakeholders. Furthermore, host lab tours for policymakers to provide firsthand context of operational challenges, a method cited as highly influential in advocacy efforts [1].

The following tables summarize key quantitative data highlighting the pressures on forensic service systems.

Table 1: Forensic Laboratory Performance and Turnaround Time Metrics

| Metric | Baseline Figure | Current/Post-Intervention Figure | Context & Source |
|---|---|---|---|
| DNA Casework Turnaround Time | 291 days (avg) | 31 days (avg) | Louisiana State Police Lab after Lean Six Sigma implementation [1] |
| Sexual Assault Kit Backlog | ~12,000 cases | ~1,700 cases | Connecticut Lab after workflow redesign [1] |
| Toxicology Testing Turnaround | Not specified | 99 days (avg) | Colorado Bureau of Investigation (as of 2025) [5] |
| All Disciplines Turnaround | Up to 2.5 years | 20 days (avg) | Connecticut Division of Scientific Services [5] |

Table 2: Forensic Service Demand and Resource Gaps

| Category | Figure | Context & Source |
|---|---|---|
| Annual Federal Funding Shortfall | $640 Million | Estimated shortfall to meet current demand for U.S. labs (2019 NIJ Needs Assessment) [1] |
| Proposed Coverdell Grant Cut (FY26) | 71% Reduction | Reduction from $35M to $10M in President's proposed budget [1] [5] |
| Toxicology Demand vs. Plan | 20% Over Plan | Scottish Police Authority toxicology testing demand [3] |
| Increase in DNA Turnaround (2017-2023) | 88% Increase | Based on Project FORESIGHT data [1] |

Featured Experimental Protocol

Rapid Screening of Benzodiazepines in Drink Residues using E-LEI-MS

Application: This protocol is designed for the rapid, qualitative screening of benzodiazepines and other illicit substances in drug-facilitated sexual assault (DFSA) investigations or in pharmaceutical quality control, using minimal sample preparation [4].

Methodology

- Principle: Extractive-Liquid Sampling Electron Ionization-Mass Spectrometry (E-LEI-MS) combines ambient sampling of a surface with the high identification power of electron ionization (EI). A solvent extracts analytes directly from the sample surface, and the liquid is aspirated into the high vacuum of the EI source where it is vaporized and ionized, providing results in less than five minutes [4].

- Sample Preparation:

- Standard Solution Analysis: Spot 20 µL of benzodiazepine standard solution (e.g., in MeOH) onto a watch glass and analyze as a dried spot [4].

- Fortified Cocktail Residue Analysis: To simulate a forensic scenario, fortify a cocktail (e.g., gin and tonic) with target benzodiazepines (e.g., clobazam, diazepam, flunitrazepam) at relevant concentrations (e.g., 20 mg/L). Spot 20 µL of the adulterated cocktail onto a watch glass and analyze as a dried spot [4]. No further pre-treatment is required.
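As a quick check on the sample preparation above, the mass of analyte deposited in each dried spot follows from concentration times volume; since 1 mg/L equals 1 ng/µL, the conversion is direct. The short sketch below works through the 20 µL spots at the standard concentrations listed in the materials table (20, 100, 1000 mg/L). It computes the amount spotted, not the amount the E-LEI probe actually extracts or detects, which the source does not report.

```python
def spotted_mass_ng(concentration_mg_per_l, volume_ul):
    """Analyte mass (ng) deposited in a spot: C x V, using 1 mg/L == 1 ng/uL."""
    ng_per_ul = concentration_mg_per_l  # numerically identical units
    return ng_per_ul * volume_ul


for conc in (20, 100, 1000):  # standard concentrations from the materials table
    print(f"{conc} mg/L x 20 uL -> {spotted_mass_ng(conc, 20):.0f} ng")
# 20 mg/L x 20 uL -> 400 ng
# 100 mg/L x 20 uL -> 2000 ng
# 1000 mg/L x 20 uL -> 20000 ng
```

So the 20 mg/L fortified cocktail places roughly 400 ng of each benzodiazepine on the watch glass.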

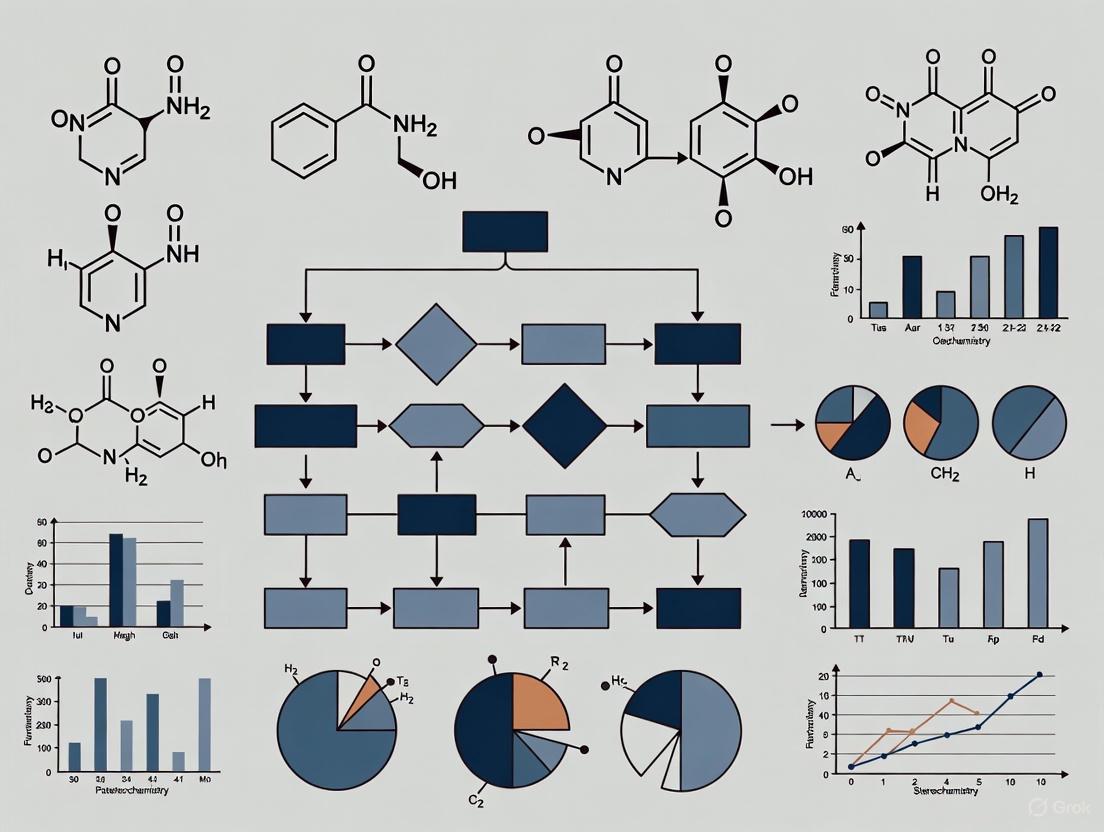

- Instrumentation and Workflow: The following diagram illustrates the core components and process flow of the E-LEI-MS system.

E-LEI-MS System Workflow

- Key Steps:

- System Configuration: The E-LEI-MS apparatus is configured with a solvent-release syringe pump and a coaxial sampling tip positioned above the sample [4].

- Solvent Extraction: A suitable solvent (e.g., acetonitrile) is pumped through the outer capillary onto the sample surface, dissolving the analytes [4].

- Aspiration: The liquid extract is immediately aspirated through the inner capillary, driven by the mass spectrometer's high vacuum [4].

- Vaporization and Ionization: The extract passes through a heated vaporization microchannel (VMC), where it is vaporized before entering the EI source for ionization [4].

- Mass Analysis and Identification: Ions are analyzed by the mass spectrometer (e.g., a triple quadrupole (QqQ) or quadrupole time-of-flight (Q-ToF) instrument). The resulting spectra are compared against standard EI libraries for compound identification [4].
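Library comparison typically scores how closely an acquired spectrum matches each reference spectrum. The sketch below uses an unweighted cosine (normalized dot-product) similarity, one common basis for EI library search. Commercial search software such as NIST MS Search applies weighted variants, so treat this as an illustration of the principle only. The compound names and peak lists are hypothetical placeholders, not real reference spectra.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two stick spectra given as {m/z: intensity} dicts."""
    shared = set(a) & set(b)
    dot = sum(a[mz] * b[mz] for mz in shared)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def best_match(query, library):
    """Return (name, score) of the library entry most similar to the query."""
    scores = {name: cosine_similarity(query, spec) for name, spec in library.items()}
    return max(scores.items(), key=lambda item: item[1])


# Hypothetical reference library and acquired spectrum (relative intensities).
library = {
    "compound_A": {100: 20.0, 150: 100.0, 200: 45.0},
    "compound_B": {90: 100.0, 120: 30.0, 200: 10.0},
}
query = {100: 18.0, 150: 100.0, 200: 50.0}

name, score = best_match(query, library)
print(name, round(score, 3))  # compound_A 0.999
```

In casework, a high match score is a screening result. Confirmation still relies on the lab's validated identification criteria.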

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for E-LEI-MS Forensic Screening

| Item | Function in the Experiment | Specification / Note |
|---|---|---|
| Coaxial Sampling Tip | Core sampling component; outer capillary delivers solvent, inner capillary aspirates the extract. | Inner: 40-50 µm I.D. silica; Outer: 450 µm I.D. PEEK tubing [4]. |
| Vaporization Microchannel (VMC) | Facilitates the vaporization and transport of the liquid extract into the high-vacuum EI source. | A tube passing through a heated transfer line; critical for analyzing medium-high boiling point molecules [4]. |
| Acetonitrile Solvent | Extraction solvent used to dissolve analytes from the sample surface for aspiration. | High purity; chosen for its effectiveness in extracting a wide range of compounds [4]. |
| Electron Ionization (EI) Source | Ionizes the vaporized analyte molecules, producing characteristic fragment patterns. | Allows for direct comparison with extensive, well-established EI spectral libraries [4]. |
| Benzodiazepine Standards | Certified reference materials used for method development, validation, and quality control. | Provided in methanol at various concentrations (e.g., 20, 100, 1000 mg/L) [4]. |

Forensic laboratories across the United States face significant backlogs in multiple evidence types, delaying justice for victims and impeding criminal investigations. The following tables quantify the current crisis using the most recent available data.

Table 1: Forensic Evidence Turnaround Times by State and Discipline (2025 Data)

| State/Jurisdiction | Sexual Assault Kits | Violent Forensic Biology | Firearms Analysis | Toxicology/Blood Alcohol | Data Source |
|---|---|---|---|---|---|
| Colorado Bureau of Investigation | 570 days (avg) | Not Specified | Not Specified | 99 days (avg) | [5] |
| Tennessee Bureau of Investigation | 17 weeks (approx. 119 days) | 38 weeks (approx. 266 days) | 67 weeks (approx. 469 days) | Not Specified | [6] |
| Connecticut Division of Scientific Services | 27 days (avg) | 20 days (avg across all disciplines) | 35 days (avg) | Not Specified | [5] |
| National Trend (2017-2023) | 88% increase in DNA casework turnaround | 25% increase in crime scene turnaround | Not Specified | 246% increase in post-mortem toxicology | [1] |

Table 2: Backlog Statistics and Case Volume Impact

| Metric | Pre-2025 Data | Current Status (2025) | Context & Impact |
|---|---|---|---|
| Sexual Assault Kit Backlog (Oregon) | Not Specified | 474 kits awaiting testing (as of June 2025) | Testing halted for all property crime DNA evidence until SAK backlog cleared [5] |
| Connecticut Backlog Evolution | 12,000 cases (early 2010s) | Backlog reduced below 1,700 cases | Result of LEAN workflow redesign and sustained investment [5] [1] |
| Tennessee Request Volume | Baseline 2022 | 7% increase in forensic biology requests (2022-2024) | 17% increase at Jackson lab; 4% increase at Knoxville lab (2023-2024) [6] |
| National Funding Shortfall | $640 million annual shortfall (2019 estimate) | Remains critical | Additional $270 million needed to address opioid crisis [1] |

Troubleshooting Guides: Addressing Common Backlog Scenarios

FAQ 1: How can our lab reduce turnaround times for sexual assault kits when facing staffing constraints?

Issue: Processing delays for sexual assault kits exceeding 6-12 months despite mandated testing timelines.

Solution Protocol: Implement a triaged workflow and strategic outsourcing.

- Step 1 – Case Triage Implementation: Establish evidence acceptance protocols prioritizing violent crimes over property crimes during backlog crises [5] [1].

- Step 2 – Strategic Outsourcing: Identify federal grants (Debbie Smith DNA Backlog Grant Program, CEBR) to fund outsourcing of oldest kits to accredited private labs [5].

- Step 3 – Workflow Redesign: Apply Lean Six Sigma principles to DNA processing, as demonstrated by Louisiana State Police, which reduced average turnaround from 291 days to 31 days [1].
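The triage rule in Step 1 (violent crimes ahead of property crimes) can be expressed as a priority queue. The sketch below assumes two hypothetical priority tiers and orders cases oldest-first within each tier. The tier names, case IDs and dates are illustrative only; a real policy would come from the lab's evidence acceptance protocol.

```python
import heapq
from datetime import date

# Hypothetical triage tiers: lower number = analyzed first.
PRIORITY = {"violent": 0, "property": 1}


def triage_order(submissions):
    """Return case IDs ordered violent-before-property, oldest first within a tier."""
    heap = []
    for case_id, offense, received in submissions:
        # Tuples compare element by element: tier, then receipt date, then ID.
        heapq.heappush(heap, (PRIORITY[offense], received, case_id))
    return [heapq.heappop(heap)[2] for _ in range(len(heap))]


incoming = [
    ("P-101", "property", date(2024, 1, 5)),
    ("V-202", "violent", date(2024, 6, 1)),
    ("V-201", "violent", date(2024, 3, 9)),
]
print(triage_order(incoming))  # ['V-201', 'V-202', 'P-101']
```

A heap is used rather than a one-off sort because new submissions can be pushed as they arrive, with the next case always available at the top of the queue.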

Validation Metrics:

- Track monthly kit processing rate

- Monitor average age of oldest untested kit

- Measure percentage of kits processed within 90-day target
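The three validation metrics above can be computed directly from kit receipt and completion dates. The sketch below assumes a hypothetical record layout of `(kit_id, date_received, date_completed or None)`; in practice these fields would come from the lab's LIMS export.

```python
from datetime import date

# Hypothetical kit records: (kit_id, date_received, date_completed or None if untested).
kits = [
    ("K1", date(2025, 1, 10), date(2025, 3, 1)),
    ("K2", date(2025, 2, 3), date(2025, 7, 15)),
    ("K3", date(2024, 11, 20), None),
    ("K4", date(2025, 5, 2), date(2025, 6, 20)),
]


def kits_completed_in_month(kits, year, month):
    """Monthly processing rate: number of kits completed in one calendar month."""
    return sum(
        1 for _, _, done in kits
        if done is not None and (done.year, done.month) == (year, month)
    )


def oldest_untested_age_days(kits, as_of):
    """Age in days of the oldest kit that has no completion date yet."""
    ages = [(as_of - received).days for _, received, done in kits if done is None]
    return max(ages, default=0)


def pct_within_target(kits, target_days=90):
    """Percentage of completed kits finished within the target turnaround."""
    tats = [(done - received).days for _, received, done in kits if done is not None]
    if not tats:
        return 0.0
    return 100 * sum(t <= target_days for t in tats) / len(tats)


print(kits_completed_in_month(kits, 2025, 6))             # 1
print(oldest_untested_age_days(kits, date(2025, 6, 30)))  # 222
print(round(pct_within_target(kits), 1))                  # 66.7
```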

FAQ 2: What methodologies effectively address multi-disciplinary backlogs (DNA, firearms, toxicology) simultaneously?

Issue: Growing backlogs across multiple forensic disciplines with limited equipment and personnel.

Solution Protocol: Deploy integrated efficiency methods and cross-training.

- Step 1 – Workflow Analysis: Conduct process mapping for each discipline to identify bottleneck stages using external process improvement methods [6].

- Step 2 – Technology Enhancement: Validate and implement automated DNA extraction systems and probabilistic genotyping software to increase analyst throughput [1] [7].

- Step 3 – Cross-Training Program: Use Coverdell grants to fund cross-training DNA analysts in basic toxicology or drug analysis to create capacity flexibility [1].

Validation Metrics:

- Measure pre- and post-implementation turnaround times by discipline

- Track cases completed per analyst FTE

- Monitor equipment utilization rates
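These metrics reduce to simple ratios. The sketch below computes the signed turnaround change, cases per analyst FTE, and instrument utilization. Every figure, including the per-discipline values and the 310 of 480 available instrument hours, is a hypothetical placeholder.

```python
# Hypothetical per-discipline figures for one quarter (all values are placeholders).
metrics = {
    "DNA":        {"tat_pre": 120, "tat_post": 80,  "cases": 450, "fte": 6.0},
    "Firearms":   {"tat_pre": 200, "tat_post": 150, "cases": 120, "fte": 2.5},
    "Toxicology": {"tat_pre": 90,  "tat_post": 60,  "cases": 900, "fte": 5.0},
}


def pct_change(before, after):
    """Signed percent change; negative means turnaround fell."""
    return 100 * (after - before) / before


def utilization(run_hours, available_hours):
    """Equipment utilization as a percentage of available instrument time."""
    return 100 * run_hours / available_hours


for name, m in metrics.items():
    print(f"{name}: TAT change {pct_change(m['tat_pre'], m['tat_post']):+.1f}%, "
          f"{m['cases'] / m['fte']:.1f} cases per FTE")

print(f"Instrument utilization: {utilization(310, 480):.1f}%")
```

Tracking these ratios per discipline before and after cross-training shows whether added flexibility is actually moving work off the most constrained bench.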

FAQ 3: How can our laboratory secure sustainable funding for capacity expansion?

Issue: Inadequate operational funding leading to growing backlogs and inability to retain staff.

Solution Protocol: Develop a multi-layered funding strategy.

- Step 1 – Federal Grant Optimization: Submit applications for both CEBR competitive grants (technical innovation) and formula grants (capacity building) with October 2025 deadlines [8].

- Step 2 – Regional Partnership Development: Establish multi-jurisdictional funding agreements using Shelby County, TN's $1.5 million regional lab as a model [5] [1].

- Step 3 – Data-Driven Advocacy: Compile laboratory-specific metrics on backlog growth and its impact on case outcomes for legislative briefings [1].

Validation Metrics:

- Track grant application success rate

- Measure percentage of budget from diversified sources

- Monitor staffing retention rates
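A minimal sketch of the three funding metrics follows, assuming simple definitions. The sketch makes three assumptions: "diversified" means any source other than the lab's primary appropriation (labeled here with the hypothetical key `state_general_fund`); retention counts only staff present at the start of the year; and all dollar and headcount figures are placeholders.

```python
def grant_success_rate(submitted, awarded):
    """Share of submitted applications that were funded (%)."""
    return 100 * awarded / submitted if submitted else 0.0


def diversified_share(budget_by_source, primary="state_general_fund"):
    """Share of the total budget from sources other than the primary one (%).

    The "state_general_fund" key is a hypothetical label for the main appropriation.
    """
    total = sum(budget_by_source.values())
    return 100 * (total - budget_by_source.get(primary, 0)) / total


def retention_rate(staff_at_start, departures):
    """Simple annual retention: start-of-year staff who did not leave (%)."""
    return 100 * (staff_at_start - departures) / staff_at_start


budget = {"state_general_fund": 6_000_000, "federal_grants": 1_500_000,
          "regional_partners": 500_000}
print(grant_success_rate(8, 3))   # 37.5
print(diversified_share(budget))  # 25.0
print(retention_rate(40, 4))      # 90.0
```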

Experimental Protocols for Backlog Reduction

Protocol 1: Lean Six Sigma Workflow Optimization for DNA Casework

Based on: Louisiana State Police Crime Laboratory implementation (Award #2008-DN-BX-K188) [1]

Objective: Reduce DNA analysis turnaround time by eliminating non-value-added process steps.

Materials:

- Process mapping software or physical workflow boards

- Cross-functional team (analysts, evidence technicians, quality assurance)

- Time-tracking system with granular task categories

Methodology:

- Process Mapping: Document each step from evidence receipt to report issuance, identifying decision points and transfers.

- Bottleneck Identification: Collect time-in-stage metrics to pinpoint major delay causes (e.g., administrative review, technical analysis).

- Waste Elimination: Apply Lean principles to remove redundant steps, batch processing delays, and unnecessary handoffs.

- Workflow Redesign: Create streamlined process with parallel processing where possible and standardized work instructions.

- Implementation & Monitoring: Deploy new workflow, tracking turnaround time weekly during stabilization phase.
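The bottleneck-identification step can be sketched as a grouping of time-in-stage records. The example below assumes a hypothetical export of `(case_id, stage, days_in_stage)` rows and ranks stages by mean dwell time. The stage names and durations are illustrative, and a median may be more robust than a mean when a few cases sit for a very long time.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical time-in-stage export: (case_id, stage, days spent in that stage).
log = [
    ("C1", "evidence_intake", 3), ("C1", "analysis", 5), ("C1", "admin_review", 21),
    ("C2", "evidence_intake", 2), ("C2", "analysis", 7), ("C2", "admin_review", 30),
    ("C3", "evidence_intake", 4), ("C3", "analysis", 6), ("C3", "admin_review", 18),
]


def stage_summary(log):
    """Mean days per stage, sorted so the slowest stage (likely bottleneck) comes first."""
    days = defaultdict(list)
    for _, stage, d in log:
        days[stage].append(d)
    return sorted(((stage, mean(v)) for stage, v in days.items()),
                  key=lambda item: item[1], reverse=True)


for stage, avg_days in stage_summary(log):
    print(f"{stage:<16}{avg_days:6.1f} days")
```

With these placeholder data, administrative review averages 23 days against 6 days of analysis. That pattern would point waste-elimination effort at the review queue rather than at the bench.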

Expected Outcomes: Louisiana implementation achieved:

- Turnaround time reduction: 291 days → 31 days

- Throughput increase: 50 → 160 cases/month

- 95% of DNA requests completed within 30 days [1]
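Expressed as relative changes, the Louisiana figures above work out as follows (plain arithmetic on the reported numbers):

```latex
\text{TAT reduction} = \frac{291 - 31}{291} = \frac{260}{291} \approx 0.893 \quad (\approx 89\%)
\qquad
\text{throughput ratio} = \frac{160}{50} = 3.2 \quad (+220\%)
```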

Protocol 2: Validation of Low-Input/Degraded DNA Extraction Methods

Based on: Michigan State Police Forensic Science Division CEBR grant project [1]

Objective: Increase successful DNA profile recovery from challenging evidence (touch DNA, degraded samples from cold cases).

Materials:

- Low-template DNA extraction kits (e.g., Promega DNA IQ, Qiagen EZ1)

- Differential extraction kits for sexual assault kits

- Quantitation instrumentation (qPCR)

- Capillary electrophoresis genetic analyzers

- Probabilistic genotyping software (STRmix)

Methodology:

- Sample Selection: Create standardized test samples with varying DNA quantities (0.1-0.01 ng) and degradation levels.

- Extraction Validation: Compare recovery rates across multiple extraction methods using standardized metrics (DNA yield, inhibitor presence, profile completeness).

- PCR Optimization: Adjust amplification cycle number and volume to enhance signal from low-template samples.

- Data Interpretation: Implement probabilistic genotyping to interpret complex mixtures and low-level profiles.

- Implementation: Develop standard operating procedure for applying validated methods to appropriate casework.
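For the extraction-validation step, one standardized metric is percent recovery (quantified yield divided by known input), averaged over replicates for each method. The sketch below uses hypothetical method names and replicate values within the 0.1-0.01 ng range described above. It covers yield only, so inhibitor presence and profile completeness would need to be scored separately.

```python
from statistics import mean

# Hypothetical validation data: method -> list of (input_ng, recovered_ng) replicates.
results = {
    "method_A": [(0.10, 0.072), (0.10, 0.068), (0.05, 0.031), (0.05, 0.035)],
    "method_B": [(0.10, 0.055), (0.10, 0.061), (0.05, 0.024), (0.05, 0.026)],
}


def mean_recovery_pct(replicates):
    """Mean percent recovery (recovered / input * 100) across replicates."""
    return mean(100 * recovered / inp for inp, recovered in replicates)


for method, reps in results.items():
    print(f"{method}: {mean_recovery_pct(reps):.1f}% mean recovery")
```

At these input levels quantitation is close to typical qPCR limits, so replicate counts and confidence intervals matter more than any single mean.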

Expected Outcomes: Michigan implementation achieved:

- 17% increase in interpretable DNA profiles from complex evidence within 12 months

- Enhanced capability to process previously unsuccessful sexual assault kits [1]

Workflow Visualization: Forensic Evidence Processing

Forensic Evidence Processing Workflow

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials and Technologies for Forensic Backlog Reduction

| Resource/Technology | Function/Application | Implementation Consideration |
|---|---|---|
| Low-Template DNA Extraction Kits | Enhances DNA recovery from limited or degraded samples | Validate for specific sample types; increases successful profile rate by 17% [1] |
| Probabilistic Genotyping Software (STRmix) | Interprets complex DNA mixtures and low-level profiles | Reduces manual review time; requires extensive validation and training [1] [7] |
| Automated Liquid Handling Systems | Standardizes extraction and PCR setup; increases throughput | High initial investment offset by long-term efficiency gains; eligible for CEBR funding [8] |
| Laboratory Information Management System (LIMS) | Tracks evidence, manages workflows, and documents chain of custody | Enables bottleneck identification through process metrics; ensures quality control [7] |
| Rapid DNA Technologies | Provides accelerated processing for triage decisions | Limited to specific sample types; useful at booking stations where a legal framework authorizes its use [6] |
| Reference DNA Databases | Supports statistical interpretation of evidence weight | Requires diverse, searchable, and curated populations for accurate statistics [7] |

For researchers, forensic scientists, and laboratory professionals, federal grant programs like the Paul Coverdell Forensic Science Improvement Grants Program and the Debbie Smith Act grants are not merely funding lines; they are the bedrock of operational capacity, innovation, and ultimately, justice. These programs are pivotal in addressing systemic challenges such as evidence backlogs, technological modernization, and workforce training. However, the landscape of federal resource allocation is shifting, presenting a looming threat that could stifle forensic science progress and undermine the reliability of criminal investigations and drug development processes.

The Vital Role of Federal Grants in Forensic Science

Forensic laboratories and medical examiner offices are the nexus where cutting-edge science meets the demands of the justice system. The stability of their funding directly impacts the quality and timeliness of their output.

Paul Coverdell Forensic Science Improvement Grants Program

Administered by the Bureau of Justice Assistance (BJA), the Coverdell Program is a unique and flexible source of federal support. It is the only federal grant program that also funds non-DNA forensic disciplines, making it indispensable for the holistic functioning of a crime lab [9]. Grants are awarded to states and units of local government with a mandate to use funds for one or more of six specific purposes [10] [11]:

- Improving the quality and timeliness of forensic science and medical examiner services.

- Eliminating backlogs in the analysis of forensic evidence (e.g., firearms, toxicology, latent prints, controlled substances).

- Training, assisting, and employing forensic laboratory personnel to eliminate backlogs.

- Addressing emerging forensic science issues (e.g., contextual bias, statistics) and technology (e.g., automation, new instrumentation).

- Educating and training forensic pathologists.

- Funding medicolegal death investigation systems to facilitate accreditation and certification.

Quantifiable Impact of Sustained Funding

The effectiveness of these programs is not theoretical; it is demonstrated by clear performance metrics. The table below summarizes the tangible impact of Coverdell Program funds over a recent decade-long period.

Table 1: Measurable Impact of Coverdell Program Funding (FY2011-FY2021) [9] [11]

| Performance Metric | Impact |
|---|---|
| Backlogged Cases Analyzed | Over 1.8 million |
| Agencies Reducing Backlogs | More than 350 |
| Forensic Personnel Trained | More than 19,000 |
| Medical Examiners/Coroners Trained | More than 2,000 (FY2021 alone) |
| Pathologists Trained | More than 40 (FY2021 alone) |
| Agencies Improving Timeliness | More than 400 |
| Agencies Obtaining Initial Accreditation | More than 20 (FY2017-FY2021) |
| Controlled Substances Identified | In more than 85% of seized drug cases tested (FY2021) |

The Looming Threat: Resource Reallocation and Its Consequences

The forensic science community is vulnerable to shifts in federal spending priorities. Recent events in adjacent fields illustrate the potential fallout that could occur if programs like Coverdell and Debbie Smith face funding cuts or resource dilution.

Precedent from Clinical Research

Significant funding cuts to the National Institutes of Health (NIH) have led to a reallocation of resources away from critical areas like vaccine development and have caused delays in regulatory review times at the FDA. This has created leadership voids in international collaborations, with implications for global scientific standards [12]. This scenario is a cautionary tale for forensic science; similar cuts would directly impair a lab's ability to operate efficiently and meet its statutory duties.

The Strain of Accelerated Programs

New, high-priority federal programs can also inadvertently strain existing resources. For instance, the FDA's Commissioner's National Priority Voucher (CNPV) pilot program, which aims to shorten drug review times dramatically, has raised concerns about diverting resources from established review programs. Experts have questioned whether the program is logistically feasible without affecting other critical functions, noting that its entire resource cost may have to be absorbed internally through reallocation [13]. This shows how well-intentioned initiatives can create competition for limited resources, threatening the stability of foundational programs.

Troubleshooting Guide: Navigating a Constrained Funding Environment

In the face of funding instability, laboratories must adopt proactive strategies to maintain operational integrity and advance their scientific missions.

FAQ: Addressing Common Funding Challenges

Q: Our lab is facing a growing backlog of forensic evidence with stagnant funding. What are the most effective strategies for resource optimization?

- A: Implement advanced predictive modeling and AI-driven resource allocation. By analyzing past case data, labs can forecast staffing and equipment needs, creating a data-driven justification for funding requests. Furthermore, machine learning can automatically scan and prioritize cases by complexity and evidence type, ensuring that limited resources are directed toward the most critical work first [14].

Q: How can we improve the success rate of our Coverdell grant applications?

- A: Focus on measurable objectives and alignment with emerging priorities. Successful applications clearly demonstrate how funds will reduce backlogs, improve timeliness, or build capacity. Emphasizing projects that address national crises, such as the opioid epidemic, or that implement new technologies to combat contextual bias, can make an application more competitive [10] [9]. Accreditation is also a key factor; many grants require labs to be accredited or actively pursuing it.

Q: What is the most critical guardrail for implementing new technologies like AI when grant funding is at risk?

- A: Human verification and a robust audit trail are non-negotiable. AI systems should be viewed as tools that require careful human oversight. Any AI model used in forensic analysis must have a documented audit trail showing the path from input to conclusion. This ensures scientific integrity and maintains the credibility of evidence in court [14].

Q: How can a lab maintain independence and avoid bias when funding sources create institutional pressures?

- A: Advocate for and adhere to structural independence. Best practices in forensic science have long recommended that forensic labs be independent from prosecutorial and law enforcement control to mitigate conscious and unconscious bias. Ensuring that defense attorneys have equal access to forensic services and the ability to challenge results is essential for maintaining scientific integrity and public trust [15].

The Scientist's Toolkit: Essential Solutions for Modern Forensic Research

To achieve more with constrained resources, laboratories must strategically invest in and utilize a core set of modern research reagents and solutions.

Table 2: Key Research Reagent Solutions for Forensic Laboratories

| Solution | Primary Function in Forensic Context |
|---|---|
| High-Throughput Automation | Automates repetitive sample processing tasks (e.g., DNA extraction, drug screening), dramatically increasing lab capacity and reducing manual errors [10] [9]. |
| Statistical Software & AI Models | Provides objective, data-driven analysis of complex evidence patterns; used for prioritizing casework, assessing evidence, and mitigating contextual bias [10] [14]. |
| Advanced Instrumentation | Enables the identification and quantification of novel synthetic drugs and trace evidence with greater sensitivity and specificity than older equipment [10] [9]. |
| Laboratory Information Management System (LIMS) | Tracks evidence from intake to disposal, ensuring chain of custody integrity and providing data for performance metrics and accreditation audits. |
| Accreditation & Certification Support | Directly funds costs associated with achieving and maintaining laboratory accreditation (e.g., through ANAB, which absorbed ASCLD/LAB in 2016) and personnel certification, which is often a grant requirement and a marker of quality [10] [11]. |

Visualizing the Forensic Grant Application and Implementation Workflow

Successfully securing and utilizing federal grants requires a methodical approach. The diagram below outlines the critical path from identification of a need to the sustainable implementation of funded projects.

Grant Application and Implementation Workflow

Strategic Protocol for Implementing AI-Driven Resource Allocation

As grant funding becomes more competitive, leveraging technology to optimize existing resources is a key survival strategy. The following protocol provides a methodology for integrating AI into lab operations.

Protocol Title: Implementation of a Predictive Modeling System for Forensic Casework Prioritization and Resource Allocation.

Objective: To systematically reduce case turnaround times and optimize staff and equipment utilization by deploying a machine learning model that predicts case processing requirements.

Materials:

- Laboratory Information Management System (LIMS) with historical case data.

- Secure computing environment for data analysis.

- Statistical software or programming language (e.g., R, Python with scikit-learn).

- Cross-functional team (lab analysts, IT specialists, management).

Methodology:

- Data Extraction and Feature Engineering: Export at least three years of historical case data from the LIMS. Key features should include: case type (e.g., controlled substance, DNA), evidence complexity, submitting agency, requestor type (e.g., law enforcement, public defender), date submitted, and analyst hours required.

- Model Training and Validation: Using a supervised learning approach (e.g., a regression model), train the algorithm to predict the "analyst hours required" based on the input features. Reserve a portion of the historical data (e.g., the most recent 20%) for model validation to test its predictive accuracy.

- Integration and Human-in-the-Loop Workflow: Integrate the validated model into the new case intake process. The model should assign a predicted resource score to each new case. However, this score must be reviewed and confirmed by a senior analyst or lab manager before being used for scheduling, ensuring human oversight and accounting for unique case factors not captured in the data [14].

- Performance Monitoring: Continuously monitor the model's performance by comparing predicted versus actual processing times. Establish a quarterly review to retrain the model with new data to prevent performance drift and maintain accuracy.
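The validation logic of this protocol (chronological holdout of the most recent 20%, predicted versus actual hours, human confirmation) can be prototyped before committing to a full machine-learning stack. The sketch below deliberately replaces the regression model with a simpler baseline: it predicts the mean analyst hours per `(case_type, complexity)` group. This is a swapped-in technique, not the protocol's model; in practice a regressor such as scikit-learn's `LinearRegression` would take its place. The field layout, rows and the `confirmed_hours` helper are hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical LIMS export: (date_submitted, case_type, complexity, analyst_hours).
rows = [
    ("2022-01-10", "DNA", "high", 14.0), ("2022-03-02", "DNA", "low", 5.0),
    ("2022-06-15", "drugs", "low", 2.0), ("2023-02-20", "DNA", "high", 16.0),
    ("2023-05-11", "drugs", "high", 6.0), ("2023-09-30", "drugs", "low", 3.0),
    ("2024-01-08", "DNA", "low", 6.0), ("2024-04-22", "DNA", "high", 15.0),
    ("2024-08-19", "drugs", "low", 2.5), ("2024-11-03", "drugs", "high", 7.0),
]


def chronological_split(rows, holdout=0.2):
    """Hold out the most recent fraction of cases for validation."""
    ordered = sorted(rows, key=lambda r: r[0])  # ISO dates sort correctly as strings
    cut = int(len(ordered) * (1 - holdout))
    return ordered[:cut], ordered[cut:]


def fit_group_means(train):
    """Baseline model: mean analyst hours per (case_type, complexity) group."""
    groups = defaultdict(list)
    for _, case_type, complexity, hours in train:
        groups[(case_type, complexity)].append(hours)
    overall = mean(r[3] for r in train)
    return {k: mean(v) for k, v in groups.items()}, overall


def predict(model, case_type, complexity):
    table, overall = model
    return table.get((case_type, complexity), overall)  # unseen group -> overall mean


def confirmed_hours(predicted, reviewer_hours=None):
    """Human-in-the-loop: a senior analyst's estimate, if given, overrides the model."""
    return predicted if reviewer_hours is None else reviewer_hours


train, test = chronological_split(rows)
model = fit_group_means(train)
mae = mean(abs(predict(model, t, c) - hours) for _, t, c, hours in test)
print(f"validation MAE: {mae:.2f} analyst hours")  # validation MAE: 0.50 analyst hours
```

A baseline like this also gives the quarterly review a reference point: a more complex model earns its place only if it beats the baseline's error on the same holdout.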

Expected Outcome: Labs implementing this protocol can expect more dynamic and efficient resource allocation. Tracking predicted versus actual hours, overall backlog size, and turnaround for high-priority evidence allows the lab to quantify the resulting improvement, which in turn strengthens arguments for continued funding.

Forensic crime labs and pharmaceutical research facilities are facing a critical human capital shortage that threatens their operational viability. Across the United States, forensic laboratories are "drowning in evidence," with severe backlogs delaying justice for victims and derailing criminal investigations [5]. Simultaneously, pharmaceutical and research laboratories are grappling with significant talent shortages, particularly in STEM and digital roles, which threaten to slow progress in research and innovation [16]. Together, staff burnout, training gaps, and private-sector competition pose an existential challenge to both the criminal justice system and the drug development pipeline, one that demands strategic resource allocation and technology implementation.

Table: Impact Assessment of Human Capital Shortages Across Laboratory Sectors

| Sector | Staffing Challenge | Operational Impact | Illustrative Delay or Outcome Metric |
|---|---|---|---|
| Forensic Crime Labs | Shortages of qualified scientists; low pay compared to private sector [5] | Evidence backlogs; difficult prioritization decisions [5] | Sexual assault kits: 570-day average turnaround in Colorado [5] |
| Pharmaceutical R&D | Talent shortages in STEM and digital roles; aging workforce [16] | Declining R&D productivity; rising costs per new drug approval [17] | Success rate for Phase 1 drugs plummeted to 6.7% in 2024 [17] |
| Clinical Laboratories | 28% of lab professionals aged 50+ planning retirement in 3-5 years [18] | 14% admit high-risk errors; 22% report low-risk errors [18] | Temporary lab closures due to understaffing [18] |

Diagnosing the Human Capital Shortage

Staff Burnout and Retention Crisis

The human capital shortage is exacerbated by critical levels of staff burnout across laboratory sectors. A recent survey of over 1,000 laboratory leaders revealed that 70% are worried about staff retention, while 60% are concerned about talent acquisition [19]. In forensic laboratories, the immense pressure on analysts to maintain perfect performance amidst overwhelming caseloads contributes significantly to burnout. As one official noted, "We have to be absolutely perfect, and if you have something that isn't perfect, that can be a career ruiner. That is a lot of pressure" [5].

The consequences of this burnout are tangible and concerning. Laboratory professionals report making high-risk errors, including biohazard exposure or reporting incorrect test results, while others worry about making errors due to excessive workloads [18]. Perhaps most alarming is that 5% of laboratory professionals report their labs have closed for entire shifts due to understaffing, delaying test results and losing vital revenue [18].

Training Gaps and Expertise Shortfalls

The skills gap represents another critical dimension of the human capital crisis. An overwhelming 78% of lab leaders express concern about the skills and expertise gap in their organizations, with 95% believing that prioritizing upskilling is crucial for lab innovation [19]. This challenge is particularly acute in forensic laboratories, where training new analysts can take months or even years, making it difficult to quickly fill critical positions and retain experienced staff [5].

The pharmaceutical industry faces parallel challenges, with companies struggling to find talent with specialized expertise in digital and personalized medicine, even as changing workforce expectations add another layer of complexity for companies trying to attract and retain top talent [16]. Without addressing these training challenges, the industry risks stalling innovation and falling behind in a rapidly evolving landscape.

Private-Sector Competition

Private-sector competition is draining talent from public forensic laboratories and research institutions. Forensic experts note that "low pay is also a challenge, with some analysts opting for private-sector jobs that offer higher salaries and better benefits" [5]. This talent migration creates a vicious cycle where remaining staff face increased workloads, leading to further burnout and attrition.

In the pharmaceutical sector, companies compete not only with each other for limited specialized talent but also with the broader technology sector, which can offer more attractive compensation packages for data science and AI expertise [16]. Competing on both fronts creates critical shortages in precisely the areas most needed for modern laboratory innovation.

Strategic Resource Allocation Solutions

Technology Implementation Framework

Strategic investment in laboratory technologies is one of the most promising approaches to mitigating human capital shortages. Automation and artificial intelligence top lists of laboratory trends for 2025, as labs increasingly rely on them to handle growing workloads and improve patient care [18]. In a survey of laboratory professionals, 95% said automation technologies will improve their ability to deliver patient care, and 89% agreed that automation is vital to keep up with demand [20].

Table: Technology Solutions for Human Capital Challenges

| Technology Solution | Targeted Human Capital Challenge | Implementation Benefit | Efficiency Impact |
|---|---|---|---|
| Laboratory Automation Systems [18] | Staff burnout from repetitive tasks | Reduces manual aliquoting and pre-analytical steps | Consolidates 25 tasks to reduce hours of work to minutes [18] |
| AI-Powered Data Analytics [17] | Training gaps in complex analysis | Identifies potential workflow bottlenecks | Enables proactive trial design adjustments, saving time/resources [17] |
| Digital Laboratory Management Systems [21] | Expertise shortage in specialized functions | Streamlines data management and regulatory compliance | Creates efficiencies allowing teams to focus on advancing therapies [21] |
| Remote Monitoring Tools [22] | Geographic talent limitations | Enables remote work options for specialized staff | Facilitates earlier detection and tailored interventions [22] |

Training and Development Programs

Addressing the expertise gap requires strategic investment in continuous training and development. Forward-thinking companies are getting creative, "partnering with universities to create specialized training programs" while upskilling current employees [16]. The integration of AI to handle repetitive tasks can free up human capital to focus on big-picture projects that drive organizational success [16].

In forensic laboratories, Project FORESIGHT provides a benchmarking framework that lets laboratories evaluate their performance relative to peer institutions and identify best practices for resource allocation and operational efficiency [23]. This data-driven approach helps laboratories "measure, preserve what works, and change what does not" through detailed analysis of casework, personnel allocation, and financial information [23].
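The general idea behind peer benchmarking, normalizing raw casework and cost data into ratios and comparing them with peer medians, can be sketched as below. Project FORESIGHT's actual metric definitions are more detailed; the two ratios, their names, and all figures here are hypothetical.

```python
from statistics import median


def efficiency_ratios(cases_completed: int, analyst_fte: float, total_cost: float) -> dict:
    """Normalize raw annual figures into ratios that can be compared across labs."""
    return {
        "cases_per_fte": cases_completed / analyst_fte,
        "cost_per_case": total_cost / cases_completed,
    }


def relative_to_peers(own: dict, peers: list[dict]) -> dict:
    """Each ratio divided by the peer median (1.0 = at the median)."""
    return {metric: own[metric] / median(p[metric] for p in peers) for metric in own}


# Hypothetical figures: (cases completed, analyst FTE, total annual cost in USD).
own = efficiency_ratios(1200, 10, 1_800_000)
peers = [
    efficiency_ratios(900, 6, 1_080_000),
    efficiency_ratios(2000, 20, 3_400_000),
    efficiency_ratios(1500, 12, 2_100_000),
]
print(relative_to_peers(own, peers))
```

In this example the lab completes slightly fewer cases per analyst than the peer median (0.96) at a higher cost per case (about 1.07), which would point the workflow analysis toward where the extra cost arises.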

Technical Support Center: Troubleshooting Human Capital Challenges

Frequently Asked Questions

Q: How can our laboratory maintain operational capacity when 28% of our staff are nearing retirement? A: Implement a phased approach combining automation for repetitive tasks [18], upskilling of junior staff [16], and utilization of remote expert consultation through digital platforms [22]. Focus on capturing institutional knowledge through structured mentorship programs before senior staff retire.

Q: What strategies effectively reduce staff burnout in high-pressure forensic environments? A: Successful laboratories combine workflow optimization through automation [18], realistic caseload management with clear prioritization protocols [5], and investment in error-reduction technologies that alleviate the perfection pressure on analysts [5].

Q: How can public laboratories compete with private-sector compensation packages? A: While direct salary competition is challenging, public laboratories can emphasize mission-oriented recruitment, implement flexible work arrangements, provide advanced training opportunities, and leverage cutting-edge technologies that make the work environment more engaging and professionally rewarding [16] [5].

Q: What specific technologies provide the best return on investment for understaffed laboratories? A: Based on industry surveys, automation systems that handle manual aliquoting and pre-analytical steps [18], AI-powered co-scientists that optimize complex workflows [18], and digital trial management systems that maintain audit readiness [21] demonstrate the most significant operational impacts.

Troubleshooting Guides

Problem: Evidence Backlogs Increasing Despite Staff Overtime

- Step 1: Conduct workflow analysis using Project FORESIGHT methodology to identify specific bottlenecks [23]

- Step 2: Implement strategic prioritization protocol for case types (e.g., violent crimes first) [5]

- Step 3: Deploy automation for high-volume, low-complexity testing processes [18]

- Step 4: Utilize outsourcing partnerships for specific case types to manage volume spikes [5]
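A prioritization protocol like Step 2 can be sketched as a priority queue: cases are ordered by a policy tier, then by age within a tier. The category names and tier numbers below are hypothetical placeholders for whatever policy a lab adopts.

```python
import heapq
from dataclasses import dataclass, field

# Hypothetical policy tiers: lower number = processed first.
PRIORITY = {"homicide": 0, "sexual_assault": 0, "violent_other": 1, "property": 2}


@dataclass(order=True)
class QueuedCase:
    tier: int
    received_day: int                    # older cases break ties within a tier
    case_id: str = field(compare=False)
    category: str = field(compare=False)


def build_queue(cases: list[tuple[str, str, int]]) -> list[QueuedCase]:
    heap: list[QueuedCase] = []
    for case_id, category, received_day in cases:
        # Unknown categories drop to the lowest tier instead of raising an error.
        tier = PRIORITY.get(category, max(PRIORITY.values()) + 1)
        heapq.heappush(heap, QueuedCase(tier, received_day, case_id, category))
    return heap


def next_cases(heap: list[QueuedCase], n: int) -> list[str]:
    """Pop the n highest-priority cases for the next work plan."""
    return [heapq.heappop(heap).case_id for _ in range(min(n, len(heap)))]


queue = build_queue([
    ("P-17", "property", 3),
    ("H-02", "homicide", 10),
    ("V-09", "violent_other", 1),
    ("S-04", "sexual_assault", 5),
])
print(next_cases(queue, 3))  # ['S-04', 'H-02', 'V-09']
```

Cases still in the queue once in-house capacity is used up are natural candidates for the outsourcing partnerships in Step 4.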

Problem: High Error Rates Among Junior Staff

- Step 1: Implement AI-powered decision support systems to reduce cognitive load [17]

- Step 2: Establish structured mentorship program pairing junior and senior staff [18]

- Step 3: Develop simulation-based training using synthetic patient cases [22]

- Step 4: Introduce progressive responsibility framework with oversight checkpoints

Problem: Recruitment Failure for Specialized Positions

- Step 1: Expand recruitment geographically with remote work options [16]

- Step 2: Develop university partnerships to create specialized training pipelines [16]

- Step 3: Highlight advanced technologies and meaningful mission in recruitment materials

- Step 4: Implement referral bonuses and retention incentives for existing staff

Essential Research Reagent Solutions for Laboratory Efficiency

Table: Key Research Reagents and Technologies for Operational Efficiency

| Reagent/Technology | Function | Impact on Human Capital |
|---|---|---|
| Automated Nucleic Acid Extraction Systems [18] | Reduces manual processing time for molecular testing | Alleviates staff burden from repetitive manual tasks; improves reproducibility |
| AI-Powered Laboratory Information Management Systems (LIMS) [20] [19] | Integrates data management, inventory tracking, and regulatory compliance | Reduces administrative burden on technical staff; minimizes documentation errors |
| Remote Monitoring Platforms [22] | Enables real-time equipment monitoring and data collection | Allows specialized staff to manage multiple sites remotely; increases flexibility |
| Point-of-Care Testing Technologies [20] [22] | Decentralizes testing to point of need | Reduces central lab workload; accelerates turnaround times |
| Machine Learning Algorithms for Data Analysis [17] | Identifies patterns in complex datasets beyond human capability | Augments staff analytical capabilities; reduces interpretation time |
| Electronic Trial Master Files (eTMF) [21] | Digital management of regulatory documentation | Streamlines compliance processes; reduces administrative staff requirements |

The human capital shortage facing forensic and research laboratories represents a critical challenge that demands systematic approaches to resource allocation and technology implementation. Laboratories that successfully navigate this crisis will be those that strategically leverage automation for repetitive tasks, implement AI-powered decision support systems, develop continuous upskilling programs, and create engaged work environments that retain specialized talent. The convergence of these strategies offers the potential not only to address immediate staffing challenges but to build more resilient, efficient laboratory operations capable of meeting evolving scientific and judicial demands.

Through the strategic implementation of the troubleshooting guides, technological solutions, and resource allocation frameworks outlined in this article, laboratory managers can transform the human capital crisis from an existential threat into an opportunity for operational transformation and enhanced scientific impact.

The integration of advanced technologies such as DNA analysis and digital forensics has transformed forensic science, but these tools cut both ways for modern crime laboratories. While they offer unprecedented capabilities for solving crimes, they also introduce significant challenges in implementation, resource allocation, and workflow management that can strain laboratory operations. Forensic labs worldwide are under mounting pressure as technological advancement outpaces their capacity, and in the United States two key federal grant programs supporting state and local forensic labs now face potential steep cuts [5]. This resource paradox is the core challenge in forensic science today: as analytical capabilities grow more sophisticated, the demands on laboratory infrastructure, personnel, and funding intensify with them. This technical support center addresses these challenges through practical troubleshooting guidance and strategic insights for researchers, scientists, and professionals working in this landscape.

Troubleshooting Guides

DNA Analysis: STR Profile Troubleshooting

Short Tandem Repeat (STR) analysis is a foundational technique for forensic DNA profiling, yet its four-step workflow (extraction, quantification, amplification, and separation/detection) presents multiple potential failure points that can compromise results [24].

Common Issue: Incomplete or Skewed STR Profiles

- Problem: The STR profile is incomplete, shows allelic dropouts, or has poor intra-locus balance.

- Potential Cause: PCR inhibition from contaminants like hematin (from blood samples) or humic acid (from soil).

- Solution:

- Use inhibition-resistant DNA polymerases or specialized extraction kits with additional wash steps to remove contaminants [24].

- Ensure complete drying of DNA samples post-purification to prevent ethanol carryover, which can inhibit amplification.

- Verify DNA quantification using multiple methods (e.g., UV spectroscopy and fluorometric analysis) to ensure accurate template amounts [25].
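Poor intra-locus balance can be screened for by computing the heterozygote peak height ratio (smaller allele peak divided by the larger) at each locus. This sketch assumes a single-source profile whose peak heights in RFU have already been exported from the analysis software; mixtures need different interpretation. The 0.6 threshold is a placeholder, since labs set their own thresholds during internal validation.

```python
def heterozygote_balance(peaks: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Peak height ratio (smaller / larger allele peak, in RFU) for each heterozygous locus."""
    return {locus: min(a, b) / max(a, b) for locus, (a, b) in peaks.items()}


def imbalanced_loci(peaks: dict[str, tuple[float, float]], min_ratio: float = 0.6) -> list[str]:
    """Loci whose ratio falls below the threshold (0.6 is a placeholder, not a standard)."""
    return [locus for locus, phr in heterozygote_balance(peaks).items() if phr < min_ratio]


# Hypothetical single-source profile: two allele peak heights (RFU) per heterozygous locus.
profile = {"D8S1179": (1450, 1320), "D21S11": (980, 410), "FGA": (620, 300)}
print(heterozygote_balance(profile))
print(imbalanced_loci(profile))  # ['D21S11', 'FGA']
```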

Common Issue: Poor Peak Morphology and Signal Intensity

- Problem: Broad peaks, reduced signal intensity, or elevated baseline noise in capillary electrophoresis.

- Potential Cause: Degraded formamide used for sample denaturation.

- Solution:

- Use high-quality, deionized formamide and minimize its exposure to air to prevent degradation.

- Avoid repeated freeze-thaw cycles of formamide aliquots.

- Ensure proper storage conditions and use fresh formamide for each run [24].

Digital Forensics: Evidence Collection & Preservation

Digital evidence is increasingly crucial in investigations but presents unique challenges compared to physical evidence, as it can be easily manipulated, removed, or hidden without visible traces [26].

Common Issue: Broken Chain of Custody

- Problem: Digital evidence is rendered inadmissible in court due to improper documentation.

- Solution:

- Use standardized evidence collection forms with timestamps and signatures for every transfer.

- Implement write-blockers during imaging to preserve evidence integrity.

- Utilize case management software with comprehensive audit trails [27].
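Hash verification complements write-blockers: the acquired image is hashed at acquisition and the hash is re-checked at each transfer. The sketch below is a minimal, tamper-evident custody log, assuming an in-memory list of JSON-serializable entries with hypothetical field names; it illustrates the idea and is not a substitute for validated case management software.

```python
import hashlib
import json
from datetime import datetime, timezone


def sha256_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash an acquired image in chunks so large files need not fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def _entry_digest(body: dict) -> str:
    # Sorted keys give a stable serialization, so the same entry always hashes the same.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_transfer(log: list[dict], evidence_hash: str, from_person: str, to_person: str) -> dict:
    """Record one custody transfer, chained to the previous entry's hash."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "evidence_sha256": evidence_hash,
        "from": from_person,
        "to": to_person,
        "prev_entry_hash": log[-1]["entry_hash"] if log else None,
    }
    entry["entry_hash"] = _entry_digest(entry)
    log.append(entry)
    return entry


def verify_log(log: list[dict]) -> bool:
    """Recompute every entry hash and link; editing any earlier entry breaks the chain."""
    prev = None
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "entry_hash"}
        if body["prev_entry_hash"] != prev or _entry_digest(body) != entry["entry_hash"]:
            return False
        prev = entry["entry_hash"]
    return True


custody: list[dict] = []
# In practice this would be sha256_file(path_to_image); a byte string stands in here.
image_hash = hashlib.sha256(b"stand-in for an acquired disk image").hexdigest()
append_transfer(custody, image_hash, "Examiner A", "Evidence locker")
append_transfer(custody, image_hash, "Evidence locker", "Examiner B")
print(verify_log(custody))  # True
```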

Common Issue: Encryption and Locked Devices

- Problem: Inability to access encrypted drives or locked smartphones containing critical evidence.

- Solution:

- Employ trusted decryption tools or leverage cloud backups where legally permissible.

- Work through legal channels for password recovery via court orders.

- Stay current on legitimate commercial tools for mobile device access and extraction (e.g., GrayKey for locked-device access, Magnet AXIOM for acquisition and analysis) [27].

Rapid DNA Implementation

Common Issue: Integration with Existing Workflows

- Problem: Rapid DNA technologies can create bottlenecks when incorporated into traditional laboratory workflows.

- Solution:

- Conduct workflow analysis before implementation to identify potential integration points.

- Implement parallel processing streams for casework and database samples.

- Develop validation protocols specific to rapid technology characteristics.

Frequently Asked Questions (FAQs)

FAQ 1: What are the most significant resource challenges facing forensic laboratories today?

Forensic laboratories face a triple threat of increasing demand, limited resources, and outdated technology [5]. Specific challenges include:

- Federal Funding Uncertainty: The Paul Coverdell Forensic Science Improvement Grants Program faces a proposed 71% cut (from $35 million to $10 million), while the Debbie Smith DNA Backlog Grant Program is funded below its authorized cap [5].

- Staffing Shortages: Low pay compared to private sector leads to difficulty retaining experienced staff. Training new analysts can take months or years [5].

- Backlog Accumulation: Labs are forced to make difficult prioritization decisions, such as halting DNA analysis for property crimes to focus on sexual assault kits [5].

FAQ 2: How can laboratories improve DNA quantification accuracy?

Accurate DNA quantification is critical for downstream success. Implement these practices:

- Always Verify Concentrations: Even commercially prepared DNA should be re-quantified in your laboratory, as listed concentrations often differ from actual measurements [25].

- Employ Multiple Methods: Use both UV spectroscopy (diluting so that A260 readings fall between 0.1 and 0.999) and absolute quantitation methods such as the TaqMan RNase P assay [25].

- Prevent Evaporation: Ensure proper sealing of quantification plates using recommended adhesive films to maintain sample integrity [24].

FAQ 3: What funding resources are available for DNA capacity building?

The DNA Capacity Enhancement for Backlog Reduction (CEBR) Program provides critical funding to state and local forensic labs to:

- Process, analyze, and interpret forensic DNA evidence more effectively

- Support personnel hiring and training

- Upgrade technology and equipment to streamline workflows

- Enhance CODIS database capabilities [8]

FY2025 funding opportunities are currently open with deadlines in October 2025 [8].

FAQ 4: What are the key differences between the CEBR and SAKI programs?

While both address forensic capacity, they have distinct focuses:

Table: Forensic Program Comparison

| Program | Full Name | Primary Focus | Funding Application |
|---|---|---|---|
| CEBR | DNA Capacity Enhancement for Backlog Reduction [8] | Processing all types of DNA evidence (homicide, burglary, etc.); laboratory capacity building [8] | Laboratory infrastructure, personnel, equipment [8] |
| SAKI | Sexual Assault Kit Initiative [8] | Testing, tracking, and investigating sexual assault cases; victim-centered approaches [8] | Comprehensive investigation support beyond laboratory work [8] |

DNA Diagnostics Market Data

The growing demands on forensic laboratories are reflected in the expanding DNA diagnostics market, which demonstrates both the opportunities and financial pressures facing the field.

Table: DNA Forensics Market Outlook

| Market Segment | 2023 Value | 2024 Value | 2025 Projection | 2030 Projection | CAGR (2025-2030) |
|---|---|---|---|---|---|
| Overall DNA Diagnostics Market | $12.3 billion [28] | $13.3 billion [28] | - | $21.2 billion [28] | 9.7% [28] |
| DNA Forensics Market | - | - | $3.3 billion [29] | $4.7 billion [29] | 7.7% [29] |
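The CAGR column follows the standard compound annual growth rate definition. As a worked check on the DNA forensics row, using the rounded table values over the five years from 2025 to 2030:

$$
\text{CAGR} = \left(\frac{V_{2030}}{V_{2025}}\right)^{1/5} - 1 = \left(\frac{4.7}{3.3}\right)^{1/5} - 1 \approx 1.424^{0.2} - 1 \approx 0.073
$$

That is about 7.3%, slightly below the reported 7.7%. The gap is within what rounding the endpoints to one decimal place can produce (values of 3.25 and 4.75 would imply about 7.9%), so the reported rate is likely based on unrounded figures.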

Table: DNA Diagnostics Market Application Analysis

| Application Segment | Key Trends | Technology Drivers |
|---|---|---|
| Infectious Disease Diagnostics | Largest application segment; boosted by rapid pathogen detection needs [28] | PCR, NGS [28] |
| Oncology | Growing adoption of liquid biopsy and tumor DNA profiling [28] | NGS, microarrays [28] |
| Genetic Testing | Increasing use in prenatal and newborn screening [28] | NGS, PCR [28] |
| Forensic and Identity Testing | Strengthening legal and security applications globally [28] | STR, Capillary Electrophoresis, NGS [28] |

Essential Research Reagent Solutions

Successful forensic analysis requires high-quality reagents and materials. The following table outlines essential components for DNA analysis workflows:

Table: Essential Research Reagents for DNA Analysis

| Reagent/Material | Function | Key Considerations |
|---|---|---|
| PCR Inhibitor Removal Kits | Remove contaminants like hematin and humic acid during extraction [24] | Select kits with additional wash steps; validate for specific sample types |
| Quantification Standards | Provide reference for accurate DNA concentration measurement [25] | TaqMan RNase P standards available at predetermined concentrations (0.6-12.0 ng/μL) |
| Deionized Formamide | Denatures DNA for proper separation during capillary electrophoresis [24] | Use high-quality grades; minimize air exposure; avoid freeze-thaw cycles |
| Fluorescent Dye Sets | Label STR markers for detection [24] | Use manufacturer-recommended sets for specific chemistries to avoid artifacts |
| Electroporation Buffers | Facilitate intracellular DNA delivery in advanced applications [30] | Optimize for specific tissue types; ensure compatibility with delivery parameters |

Experimental Protocols

STR Analysis Workflow

The standard STR analysis protocol involves four critical phases that must be meticulously executed to generate reliable, court-admissible results.

Phase 1: DNA Extraction

- Protocol: Use silica-based membrane columns or magnetic bead systems for optimal yield.

- Critical Step: Incorporate inhibitor removal techniques through additional wash steps, particularly for challenging samples like blood or soil-contaminated evidence [24].

- Quality Control: Ensure complete drying of pellets to prevent ethanol carryover, which can inhibit downstream amplification.

Phase 2: DNA Quantification

- Protocol: Employ quantitative PCR (qPCR) methods like the TaqMan RNase P assay for accurate measurement of amplifiable DNA.

- Critical Step: Always verify DNA concentration before use, even for commercially prepared samples, as listed concentrations often differ from actual measurements [25].

- Troubleshooting: For UV spectroscopy, dilute samples so that A260 readings fall between 0.1 and 0.999 (approximately 4-50 ng/μL) for valid measurements.
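Converting an A260 reading to concentration uses the widely cited approximation for double-stranded DNA (an A260 of 1.0 at a 1 cm path length corresponds to about 50 ng/μL), multiplied by the dilution factor. The sketch below assumes that factor and a 1 cm path, and refuses readings outside the 0.1-0.999 window rather than reporting them.

```python
DSDNA_NG_PER_UL_PER_A260 = 50.0  # common approximation for double-stranded DNA, 1 cm path


def dsdna_concentration(a260: float, dilution_factor: float = 1.0) -> float:
    """Convert a diluted sample's A260 reading to the stock concentration in ng/uL."""
    if not 0.1 <= a260 <= 0.999:
        raise ValueError(f"A260 {a260} outside 0.1-0.999; adjust the dilution and re-read")
    return a260 * DSDNA_NG_PER_UL_PER_A260 * dilution_factor


# A 1:10 dilution reading 0.25 implies 0.25 * 50 * 10 = 125 ng/uL in the stock.
print(dsdna_concentration(0.25, dilution_factor=10))  # 125.0
```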

Phase 3: DNA Amplification

- Protocol: Use calibrated pipettes and thoroughly vortex primer pair mixes before setting up amplification reactions.

- Critical Step: Maintain consistent thermal cycling conditions and reagent ratios across all samples.

- Troubleshooting: If allelic dropout occurs, check primer mix concentration and template DNA quantity [24].
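Checking template DNA quantity usually means converting the quantification result into a volume of extract to add to the amplification. The sketch below assumes a hypothetical target input of 1.0 ng and a hypothetical maximum template volume of 15 μL; both depend on the STR kit and the lab's validated protocol, so they are parameters rather than recommendations.

```python
def template_volume(conc_ng_per_ul: float, target_ng: float = 1.0,
                    max_volume_ul: float = 15.0) -> tuple[float, float, bool]:
    """Return (uL of extract to add, ng delivered, whether the target input was reached).

    target_ng and max_volume_ul are hypothetical defaults; use the kit's validated values.
    """
    if conc_ng_per_ul <= 0:
        raise ValueError("no quantifiable DNA; follow the lab's low-template policy")
    volume = target_ng / conc_ng_per_ul
    if volume <= max_volume_ul:
        return volume, target_ng, True
    # Low-template extract: cap at the maximum volume and flag the shortfall,
    # since reduced input raises the risk of allelic dropout.
    return max_volume_ul, conc_ng_per_ul * max_volume_ul, False


print(template_volume(0.5))   # (2.0, 1.0, True)
print(template_volume(0.02))  # capped at 15 uL, about 0.3 ng delivered, target not reached
```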

Phase 4: Separation and Detection

- Protocol: Use fresh, high-quality formamide for sample denaturation and the manufacturer-recommended dye sets for your specific chemistry.

- Critical Step: Minimize formamide exposure to air to prevent degradation that causes peak broadening [24].

- Quality Control: Include appropriate size standards in each capillary electrophoresis run.

Electroporation-Mediated DNA Delivery

For advanced applications requiring enhanced intracellular DNA delivery, electroporation represents a promising technological advancement, though it introduces additional complexity.

Protocol Overview: Electroporation (EP) mediates intracellular DNA delivery through brief application of electrical fields to target cells, inducing transient membrane permeability that allows DNA uptake [30].

Step-by-Step Methodology:

- DNA Administration: Administer DNA to target tissue (e.g., skeletal muscle, skin, tumor) via direct injection.

- Electrode Configuration: Position electrode arrays to ensure electrical fields colocalize precisely with DNA distribution sites.

- Parameter Optimization: Apply electrical fields with carefully optimized parameters (wave type, amplitude, duration, number, frequency) tailored to specific tissue types.

- Viability Maintenance: Use appropriate electrical conditions that maintain cell viability while enabling efficient DNA uptake.

Applications:

- Therapeutic Proteins: Sustained endogenous expression of immunomodulatory cytokines or growth factors [30].

- DNA Vaccination: Enhanced cellular and humoral immune responses across diverse antigens [30].

- RNA Interference: Long-term down-regulation of target genes through expressed short-hairpin RNA [30].

Advanced forensic technologies offer remarkable analytical capabilities but also introduce significant implementation challenges. The path forward requires strategic resource allocation that balances technological adoption with operational sustainability. Laboratories must prioritize workforce development, pursue available funding mechanisms such as the CEBR program, implement rigorous troubleshooting protocols, and carefully validate new technologies before full integration. By acknowledging both the promise and the pitfalls of these technologies, forensic facilities can turn potential obstacles into opportunities to deliver justice more effectively.

Building a Roadmap: Methodologies for Strategic Technology Implementation and Resource Planning

Forensic crime labs are at a critical juncture. As demand for services grows and technology rapidly advances, laboratories face mounting pressure to modernize while contending with significant backlogs and potential federal funding cuts [31]. The industry, valued at $3.7 billion in the US, is experiencing a wave of technological innovation, yet many labs lack a structured process for integrating these new tools effectively [32]. This article provides a step-by-step framework for forensic researchers and scientists to systematically evaluate and prioritize new technologies, ensuring that limited resources are allocated to solutions that offer the greatest impact on casework and operational efficiency.

Phase 1: Needs Assessment & Initial Evaluation

Step 1: Define the Problem and Requirements

Before evaluating any specific technology, clearly articulate the problem it will solve. Is the goal to reduce turnaround time for DNA analysis, improve the accuracy of digital evidence examination, or address a specific type of backlog such as controlled substance testing [32] [31]?

- Input: Gather data from internal stakeholders (lab analysts, quality assurance managers, prosecutors) to identify pain points.

- Output: A problem statement and a list of core technical and operational requirements (e.g., must process 50 samples per day, must integrate with the existing Laboratory Information Management System (LIMS), must comply with the FBI Quality Assurance Standards (QAS)).
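Writing requirements in a checkable form makes the later vendor comparison less subjective. The sketch below encodes the three example requirements as pass/fail checks against a candidate's specification sheet; the `CandidateSpec` fields and the vendor values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CandidateSpec:
    name: str
    samples_per_day: int
    lims_integration: bool         # vendor-confirmed interface to the lab's existing LIMS
    qas_validation_support: bool   # vendor documentation supports FBI QAS validation


# (description, check) pairs mirroring the example requirements above.
REQUIREMENTS = [
    ("processes at least 50 samples/day", lambda c: c.samples_per_day >= 50),
    ("integrates with existing LIMS", lambda c: c.lims_integration),
    ("supports FBI QAS validation", lambda c: c.qas_validation_support),
]


def unmet_requirements(candidate: CandidateSpec) -> list[str]:
    """Descriptions of every requirement the candidate fails; empty means it passes."""
    return [desc for desc, check in REQUIREMENTS if not check(candidate)]


print(unmet_requirements(CandidateSpec("Vendor A", 48, True, True)))
# ['processes at least 50 samples/day']
```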

Step 2: Market Scanning and Vendor Identification

Conduct a broad scan of the available technologies that address your defined problem. This involves researching vendors, attending industry conferences, and consulting scientific publications.

Table: Key Forensic Technology Market Segments

| Technology Segment | Example Applications | Market Context |
|---|---|---|
| Forensic Biology | DNA sequencing, STR analysis | Largest product segment in the forensic services market [32]. |
| Controlled Substances | Drug identification, chemical analysis | Accounts for over one-third of industry revenue [32]. |
| Digital Evidence | Mobile device forensics, data recovery | Demand has risen sharply with technological advancement [31]. |
| Portable Analytics | Portable DNA analyzers, field test kits | Emerging trend to support faster, on-site analysis [32]. |

Phase 2: Technical Evaluation & Experimental Protocol

Once a potential technology is identified, a rigorous, evidence-based evaluation is crucial. The following protocol provides a methodology for testing a new analytical instrument, such as a portable DNA analyzer.

Experimental Protocol: Evaluating a Portable DNA Analyzer

Objective: To determine the performance, reliability, and operational impact of a new portable DNA analyzer compared to existing laboratory-based systems.

1. Hypothesis

The portable DNA analyzer will provide DNA profiles of comparable quality to the standard laboratory system, with a significant reduction in processing time and required user steps, without compromising data integrity for database entry.

2. Materials and Reagents

Table: Research Reagent Solutions & Essential Materials

| Item | Function / Explanation |
|---|---|
| Reference DNA Samples | Commercially available, standardized samples with known profiles to establish baseline accuracy and reproducibility. |
| Swabs & Collection Kits | To collect mock evidence from controlled surfaces, testing the instrument with real-world sample types. |
| Lysis Buffer & Purification Kits | Reagents for breaking down cells and isolating DNA, critical for evaluating the instrument's integrated vs. manual prep workflow. |
| PCR Master Mix | Pre-mixed reagents for the Polymerase Chain Reaction (PCR) to amplify DNA; tests compatibility with the device's micro-fluidic chambers. |
| Electrophoresis Standard | A standard DNA fragment size marker to validate the accuracy of the instrument's internal sizing analysis. |
| Positive & Negative Controls | Validates that the instrument and reagents are functioning correctly and detects any contamination. |

3. Methodology

- Sample Preparation: Process 50 mock evidence samples using the portable device's protocol. In parallel, process the same 50 samples using the standard laboratory protocol.

- Data Collection: For each sample, record the following metrics:

- Total Processing Time: Time from sample introduction to final profile generation.

- Hands-On Time: Active time required by the analyst.

- Profile Quality Metrics: Such as peak height, balance, and signal-to-noise ratio.

- Accuracy: Percentage of alleles correctly called compared to the known reference.

- Success Rate: Percentage of samples that produced a database-acceptable profile.

- Data Analysis: Perform a statistical comparison of processing times and profile quality metrics between the two systems (e.g., a paired t-test, since both systems process the same 50 samples).
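Because the same samples run on both systems, the time comparison is naturally paired. The sketch below computes allele-call accuracy, success rate, and a paired t statistic using only the standard library; the record layout and the example times are hypothetical, and a p-value would normally come from a statistics package such as SciPy (`scipy.stats.ttest_rel`).

```python
import math
from statistics import mean, stdev


def allele_accuracy(called: list[set[str]], reference: list[set[str]]) -> float:
    """Percent of reference alleles correctly called, pooled over loci.

    Extra (drop-in) calls are not penalized here; record them as a separate metric.
    """
    correct = sum(len(c & r) for c, r in zip(called, reference))
    total = sum(len(r) for r in reference)
    return 100.0 * correct / total


def success_rate(database_acceptable: list[bool]) -> float:
    """Percent of samples yielding a database-acceptable profile."""
    return 100.0 * sum(database_acceptable) / len(database_acceptable)


def paired_t(portable: list[float], standard: list[float]) -> tuple[float, float, int]:
    """Paired t statistic on per-sample differences (portable minus standard).

    Returns (mean difference, t statistic, degrees of freedom).
    """
    diffs = [p - s for p, s in zip(portable, standard)]
    sd = stdev(diffs)
    if sd == 0:
        raise ValueError("identical differences; t statistic is undefined")
    d_bar = mean(diffs)
    return d_bar, d_bar / (sd / math.sqrt(len(diffs))), len(diffs) - 1


# Hypothetical total processing times (hours) for four of the 50 samples.
d_bar, t, df = paired_t([2.0, 2.5, 1.5, 2.0], [8.0, 9.0, 7.5, 8.5])
print(f"mean difference {d_bar:.2f} h, t = {t:.1f}, df = {df}")
```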

Technology Evaluation Workflow

The following diagram visualizes the core experimental and decision-making workflow for evaluating a new technology.

Phase 3: Acquisition Prioritization & Resource Allocation

The final phase involves translating experimental data into a strategic decision, justifying the investment to stakeholders.

Step 1: Quantitative Scoring with a Prioritization Matrix

Create a weighted scoring matrix to objectively compare multiple technologies or a single technology against the status quo.

Table: Technology Prioritization Scorecard

| Evaluation Criterion | Weight | Score (1-5) | Weighted Score | Comments / Evidence |
|---|---|---|---|---|
| Technical Performance | 30% | | | Based on experimental results (e.g., 98% accuracy achieved in validation study). |
| Impact on Backlog | 25% | | | Estimated 40% reduction in processing time per sample. |
| Total Cost of Ownership | 20% | | | Includes purchase price, maintenance, and consumables over 5 years. |
| Ease of Implementation | 15% | | | Assessment of training needs and LIMS integration complexity. |
| Regulatory Compliance | 10% | | | Alignment with FBI QAS and ISO/IEC 17025 requirements. |
| Total Score | 100% | | | |
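The scorecard arithmetic is a weighted sum: each 1-5 score is multiplied by its criterion weight and the products are added, giving a total between 1 and 5. The weights below are those in the scorecard; the scores are hypothetical placeholders, not results.

```python
# Criterion weights from the scorecard; they must total 100%.
WEIGHTS = {
    "Technical Performance": 0.30,
    "Impact on Backlog": 0.25,
    "Total Cost of Ownership": 0.20,
    "Ease of Implementation": 0.15,
    "Regulatory Compliance": 0.10,
}


def weighted_total(scores: dict[str, int]) -> float:
    """Sum of weight x score over all criteria; the result lies between 1 and 5."""
    if abs(sum(WEIGHTS.values()) - 1.0) > 1e-9:
        raise ValueError("weights must total 100%")
    for criterion in WEIGHTS:
        if not 1 <= scores[criterion] <= 5:
            raise ValueError(f"{criterion}: score must be on the 1-5 scale")
    return sum(WEIGHTS[c] * scores[c] for c in WEIGHTS)


# Hypothetical scores for one candidate instrument and for the status quo.
candidate = {"Technical Performance": 4, "Impact on Backlog": 5, "Total Cost of Ownership": 2,
             "Ease of Implementation": 3, "Regulatory Compliance": 5}
status_quo = {c: 3 for c in WEIGHTS}
print(round(weighted_total(candidate), 2), round(weighted_total(status_quo), 2))  # 3.8 3.0
```

Scoring the status quo on the same sheet gives the baseline the business case in Step 2 needs to beat.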

Step 2: Build a Business Case for Acquisition

Synthesize your findings into a compelling business case that addresses the specific challenges and opportunities in the forensic context.

- Frame the Problem: Start with data on current backlogs or inefficiencies [31].

- Present the Solution: Summarize the experimental results that prove the technology's value.

- Justify the Cost: Calculate the Return on Investment (ROI). ROI here is not purely financial; it also includes the value of reducing casework delays, which stall prosecutions and delay justice [31].

- Propose an Implementation Plan: Outline a rollout strategy, including training, validation, and phased integration.

Resource Allocation Logic

The final acquisition decision must balance proven performance with strategic resource allocation, a logic flow captured in the diagram below.

Technical Support Center

Troubleshooting Guides & FAQs

Q1: During the validation of a new DNA sequencer, we are observing inconsistent results between runs. What are the first steps we should take to troubleshoot this?

- A1: Begin by systematically isolating variables.

- Reagent Integrity: Check the lot numbers and expiration dates of all reagents. Run the test with a fresh, unopened set of buffers and master mix.

- Instrument Calibration: Verify that the instrument's calibration is current and meets the manufacturer's specifications. Run all recommended diagnostic and quality control protocols.

- Environmental Controls: Confirm that the laboratory environment (temperature, humidity) is stable and within the operating parameters for the instrument.

- Sample Quality: Ensure the input DNA samples for validation are of consistent quality, quantity, and purity. Re-extract samples using a standardized protocol if necessary.
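Isolating variables is easier when run-level QC data are grouped by the factor under suspicion. The sketch below groups a hypothetical per-run positive-control metric by reagent lot and flags any lot whose mean departs from all other runs by more than two standard deviations of those runs. The metric, the lot labels, and the two-SD rule are illustrative assumptions, not a validated acceptance criterion.

```python
from collections import defaultdict
from statistics import mean, stdev


def lot_means(runs: list[tuple[str, float]]) -> dict[str, float]:
    """Mean QC metric per reagent lot; runs are (lot_number, metric) pairs."""
    by_lot: dict[str, list[float]] = defaultdict(list)
    for lot, value in runs:
        by_lot[lot].append(value)
    return {lot: mean(values) for lot, values in by_lot.items()}


def suspect_lots(runs: list[tuple[str, float]], k: float = 2.0) -> list[str]:
    """Flag lots whose mean departs from all other runs by more than k SDs of those runs."""
    flagged = []
    for lot, lot_mean in lot_means(runs).items():
        others = [v for other, v in runs if other != lot]
        if len(others) < 2:
            continue  # too little comparison data to estimate spread
        if abs(lot_mean - mean(others)) > k * stdev(others):
            flagged.append(lot)
    return flagged


# Hypothetical per-run positive-control metric (e.g., percent of reads passing filter).
runs = [("A", 95), ("A", 96), ("A", 94), ("A", 95),
        ("B", 95), ("B", 94), ("B", 96),
        ("C", 80), ("C", 82)]
print(suspect_lots(runs))  # ['C']
```

Comparing each lot against the remaining runs, rather than against an overall mean that includes the suspect lot, keeps a single bad lot from inflating the spread it is judged against.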

Q2: Our lab is considering a new, automated drug analysis system. How can we build a robust business case to secure funding, especially with potential federal grant cuts [31]?

- A2: Focus on a data-driven case that demonstrates efficiency and long-term value.

- Quantify Current Costs: Calculate the current fully burdened cost of your manual drug analysis process (analyst time, consumables, instrument maintenance).

- Project Efficiency Gains: Use data from your vendor evaluation and any pilot studies to project time savings (e.g., "The system processes 3x the samples per analyst shift").

- Calculate ROI: Model the total cost of ownership (purchase, installation, training, annual service contracts) against the projected efficiency savings and the value of reducing the controlled substances backlog.

- Highlight Strategic Benefits: Emphasize non-financial benefits, such as reduced analyst fatigue, improved data traceability, and the ability to re-allocate skilled staff to more complex tasks.
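The TCO-versus-savings model described above can be prototyped in a few lines before it goes into a spreadsheet or grant narrative. In this sketch all dollar amounts and the five-year horizon are hypothetical. The value of backlog reduction is left out, so only direct savings are counted.

```python
# Minimal sketch: total cost of ownership (TCO) vs. projected savings, with payback year.
# All figures and the horizon are hypothetical.
from typing import Optional

HORIZON_YEARS = 5  # hypothetical evaluation horizon


def total_cost_of_ownership(purchase: float, installation: float, training: float,
                            annual_service: float, years: int) -> float:
    """Purchase + installation + training + service contracts over the horizon."""
    return purchase + installation + training + annual_service * years


def payback_year(upfront: float, annual_service: float, annual_savings: float,
                 years: int) -> Optional[int]:
    """First year in which cumulative net savings recover the upfront cost, else None."""
    cumulative = -upfront
    for year in range(1, years + 1):
        cumulative += annual_savings - annual_service
        if cumulative >= 0:
            return year
    return None


# Hypothetical inputs for an automated drug analysis system
purchase, installation, training = 250_000, 20_000, 30_000
annual_service = 25_000
annual_savings = 125_000  # projected reduction in fully burdened manual cost per year

tco = total_cost_of_ownership(purchase, installation, training, annual_service, HORIZON_YEARS)
net_benefit = annual_savings * HORIZON_YEARS - tco
payback = payback_year(purchase + installation + training, annual_service,
                       annual_savings, HORIZON_YEARS)
print(f"{HORIZON_YEARS}-year TCO: ${tco:,.0f}")
print(f"Net benefit: ${net_benefit:,.0f}; payback in year {payback}")
```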

Q3: A common challenge in adopting new technology is staff resistance. How can we structure the evaluation phase to foster buy-in from our scientists and analysts?

- A3: Involve end-users from the very beginning.

- Form a User Evaluation Group: Include analysts of varying experience levels in the testing and protocol development for the new technology.

- Encourage Hands-On Testing: Allow them to run the equipment during the evaluation phase and provide structured feedback on the user interface and workflow.

- Address Concerns Proactively: Use feedback forms and meetings to identify and document specific concerns about the new process, and work with the vendor to address them.

- Empower Champions: Identify early adopters who are enthusiastic about the technology and can act as peer trainers and advocates during the full rollout.

In an era defined by both technological promise and fiscal constraint, a methodical approach to technology assessment is not merely beneficial—it is essential for the modern forensic laboratory. By adopting this structured framework, from initial needs assessment through rigorous experimental validation and final financial justification, labs can make defensible, data-driven decisions. This ensures that every investment directly supports the core mission: to deliver timely, reliable, and accurate scientific evidence in the pursuit of justice.

Leveraging Agile and Project Management Frameworks for Flexible Resource Allocation

Technical Support Center: FAQs & Troubleshooting Guides

Frequently Asked Questions (FAQs)

Q1: How can Agile principles be applied to the resource-constrained environment of a crime lab or research setting?

Agile resource management emphasizes flexible allocation and continuous reassessment of resources based on changing project needs, which is ideal for dynamic environments like labs [33]. This involves:

- Dynamic Reallocation: Regularly reviewing project tasks and reallocating personnel with the right skills to where they are most needed [33].

- Prioritized Backlog: Maintaining a prioritized backlog of work items (e.g., cases, experiments) allows a self-managing team to pull the most critical tasks first [34]. This ensures that high-value work is resourced promptly [33].

- Cross-Functional Teams: Creating interdisciplinary teams aids in better workload distribution and reduces dependency on specific, often scarce, individuals [33].
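A skills inventory turns dynamic reallocation into a lookup rather than a negotiation. The sketch below assigns a task to the qualified analyst with the most free hours and returns nothing when no qualified person has capacity. Names, skills and hours are hypothetical. Choosing the least-loaded analyst is one simple policy among many.

```python
# Minimal sketch of skills-based reallocation. Names, skills and hours are hypothetical.
from dataclasses import dataclass
from typing import Optional


@dataclass
class Analyst:
    name: str
    skills: set[str]
    capacity_hours: float        # hours available this period
    assigned_hours: float = 0.0  # hours already committed

    @property
    def free_hours(self) -> float:
        return self.capacity_hours - self.assigned_hours


def reassign(skill: str, hours: float, team: list[Analyst]) -> Optional[Analyst]:
    """Give the task to the qualified analyst with the most free hours, if anyone can take it."""
    qualified = [a for a in team if skill in a.skills and a.free_hours >= hours]
    if not qualified:
        return None  # no capacity: re-prioritize, escalate, or cross-train
    chosen = max(qualified, key=lambda a: a.free_hours)
    chosen.assigned_hours += hours
    return chosen


team = [
    Analyst("Analyst A", {"STR typing", "mixture interpretation"}, 40, 36),
    Analyst("Analyst B", {"STR typing"}, 40, 20),
    Analyst("Analyst C", {"mobile device extraction"}, 40, 10),
]
chosen = reassign("STR typing", 8, team)
print(chosen.name if chosen else "no qualified analyst available")
```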

Q2: What are the most common resource allocation bottlenecks when implementing a new technology, and how can we overcome them?

The table below summarizes common bottlenecks and their mitigation strategies.

| Bottleneck | Description | Mitigation Strategy |
|---|---|---|
| Resource Availability | Moving key personnel with specialized skills (e.g., a digital forensics expert) can create conflicts and delays in their original projects [33]. | Maintain a skills inventory to quickly identify available talent and proactively communicate changing priorities [33]. |
| Unbalanced Workload | Losing sight of individual workloads while reallocating staff leads to burnout for some and underutilization of others [33]. | Use resource leveling to adjust schedules based on available capacity, creating a sustainable workflow [35]. |
| Resistance to Cultural Shift | Moving from a traditional "plan and execute" model to a flexible "adjust on the go" Agile model can cause resistance [33]. | Foster a culture of continuous improvement with regular retrospectives and lead with empathy to engage team members emotionally [36] [33]. |
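Resource leveling starts from knowing who is over or under capacity. The sketch below computes each person's utilization (assigned hours divided by available hours) and flags imbalances. It only identifies the problem and does not reschedule any work. The 100% and 60% thresholds and all hours are hypothetical.

```python
# Minimal sketch: flag over- and under-allocated staff before leveling.
# Thresholds and hours are hypothetical.


def utilization(assigned: dict[str, float], capacity: dict[str, float]) -> dict[str, float]:
    """Assigned hours as a fraction of available hours, per person."""
    return {name: assigned.get(name, 0.0) / capacity[name] for name in capacity}


def flag_imbalance(util: dict[str, float], over: float = 1.0,
                   under: float = 0.6) -> dict[str, list[str]]:
    """Names above the overload threshold and below the underuse threshold."""
    return {
        "overloaded": sorted(n for n, u in util.items() if u > over),
        "underused": sorted(n for n, u in util.items() if u < under),
    }


capacity = {"Analyst A": 40, "Analyst B": 40, "Analyst C": 32}
assigned = {"Analyst A": 52, "Analyst B": 38, "Analyst C": 12}

util = utilization(assigned, capacity)
for name, u in util.items():
    print(f"{name}: {u:.0%}")
flags = flag_imbalance(util)
print(flags)
```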

Q3: Our work requires strict compliance and documentation. Is Agile compatible with a regulated environment?

Yes, but it requires a tailored approach. The Agile mindset of iterative progress and adaptability can be overlaid onto pre-existing compliance structures [37]. You must ensure that all regulatory and documentation requirements are followed as usual, using the Agile framework to make your teams more efficient within those fixed constraints [37]. Methodologies like Stage-Gate can be combined with Agile, using the gates for rigorous compliance reviews before a project progresses [38].
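A gate can be made concrete as a checklist that must be complete before work moves to the next stage, while the sprints inside each stage stay flexible. The gate names and required artifacts in this sketch are hypothetical illustrations, not text taken from the FBI QAS or ISO/IEC 17025.

```python
# Minimal sketch of Stage-Gate style compliance checks layered over Agile work.
# Gate names and required artifacts are hypothetical.

GATE_REQUIREMENTS: dict[str, set[str]] = {
    "validation": {"validation_plan_approved", "validation_report_signed"},
    "casework_release": {"sop_approved", "competency_tests_passed", "qa_review_signed"},
}


def gate_review(gate: str, completed: set[str]) -> tuple[bool, set[str]]:
    """Return (passes, missing_artifacts) for one gate."""
    missing = GATE_REQUIREMENTS[gate] - completed
    return not missing, missing


completed = {"validation_plan_approved", "validation_report_signed", "sop_approved"}
for gate in GATE_REQUIREMENTS:
    passed, missing = gate_review(gate, completed)
    print(gate, "PASS" if passed else f"HOLD, missing: {sorted(missing)}")
```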

Q4: What KPIs should we use to measure the success of our resource allocation strategy?

Success should be measured by outcome-based metrics, not just velocity. In an Agile context, the team should collaboratively define the "definition of done" and the KPIs that indicate progress [37]. These can include:

- Throughput: Increased number of cases or experiments completed within a sprint [34].

- On-Time Delivery: Improved adherence to project timelines and milestones [39].

- Team Engagement: Measured through surveys and feedback, as greater accountability and engagement are key benefits of effective Agile resource management [33].
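The first two KPIs can be computed directly from completion records. In this sketch the case IDs, sprint numbers and dates are hypothetical, and "on time" is assumed to mean completed on or before the due date. Engagement survey results would be tracked separately.

```python
# Minimal sketch of throughput and on-time delivery KPIs. All records are hypothetical.
from collections import Counter
from datetime import date

# (case_id, sprint, due_date, completed_date)
completed_cases = [
    ("C-101", 1, date(2025, 3, 10), date(2025, 3, 7)),
    ("C-102", 1, date(2025, 3, 12), date(2025, 3, 14)),
    ("C-103", 2, date(2025, 3, 20), date(2025, 3, 18)),
    ("C-104", 2, date(2025, 3, 24), date(2025, 3, 24)),
    ("C-105", 2, date(2025, 3, 28), date(2025, 3, 26)),
]


def throughput_per_sprint(records) -> dict[int, int]:
    """Number of cases completed in each sprint."""
    return dict(Counter(sprint for _, sprint, _, _ in records))


def on_time_rate(records) -> float:
    """Share of cases completed on or before their due date."""
    on_time = sum(1 for _, _, due, done in records if done <= due)
    return on_time / len(records)


print("Throughput:", throughput_per_sprint(completed_cases))
print(f"On-time delivery: {on_time_rate(completed_cases):.0%}")
```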

Troubleshooting Common Experimental Issues

Issue 1: Sprint Progress Has Stalled on a Key Experiment

- Problem: A critical experimental task is stuck in the "In Progress" column on your Kanban board for too long.

- Solution:

- Visualize the Blockage: Use a physical or digital Kanban board to make the stalled task visible to the entire team [37].

- Discuss in Daily Stand-up: Use the daily stand-up meeting to ask: "What is blocking the progress of this task?" and "How can the team help overcome this?" [37].

- Identify Dependencies: The blockage often occurs because the task is dependent on another task that hasn't been completed or a resource that isn't available. The team can then collaboratively focus on unblocking that dependency [37].
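A digital board can surface stalled items automatically before the stand-up. The sketch below flags cards that have been "In Progress" longer than a threshold and lists any of their dependencies that are not yet "Done". The card IDs, dates, five-day threshold and `depends_on` field are hypothetical.

```python
# Minimal sketch: find stalled Kanban cards and their unresolved dependencies.
# Card IDs, dates, threshold and fields are hypothetical.
from datetime import date

cards = {
    # "entered" = date the card entered its current column
    "EXP-7": {"column": "In Progress", "entered": date(2025, 4, 1), "depends_on": ["EXP-3"]},
    "EXP-3": {"column": "Backlog", "entered": date(2025, 3, 20), "depends_on": []},
    "EXP-9": {"column": "In Progress", "entered": date(2025, 4, 8), "depends_on": []},
    "EXP-2": {"column": "Done", "entered": date(2025, 3, 25), "depends_on": []},
}


def stalled_cards(cards: dict, today: date, max_days: int = 5) -> dict[str, list[str]]:
    """Map each stalled card to the dependencies that are not yet Done."""
    report = {}
    for card_id, card in cards.items():
        age = (today - card["entered"]).days
        if card["column"] == "In Progress" and age > max_days:
            # A dependency missing from the board is treated as unresolved
            report[card_id] = [d for d in card["depends_on"]
                               if cards.get(d, {}).get("column") != "Done"]
    return report


print(stalled_cards(cards, today=date(2025, 4, 10)))
```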

Issue 2: Constant Scope Changes from Stakeholders Are Derailing Resource Plans

- Problem: A stakeholder frequently requests changes, causing constant context-switching for researchers and breaking the team's workflow.

- Solution:

- Involve Stakeholders: Maintain regular and transparent communication with stakeholders about the project's progress and the realistic effort required for new requests [37] [36].

- Use a Prioritized Backlog: Instead of immediately acting on new requests, add them to a prioritized product backlog. During the next sprint planning meeting, the team can pull the highest-priority items (including new requests) into the upcoming sprint, ensuring that the most valuable work is always being resourced without disrupting the current sprint's goal [40] [34].

- Communicate with Story Points: Use relative estimation (e.g., story points) to help stakeholders understand the true weight and complexity of a new request, fostering a more realistic understanding of timelines [37].
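Story points become persuasive once they are converted into sprints using the team's recent velocity. The sketch below shows when a new request would finish if it is placed behind already-prioritized work. The velocities and point values are hypothetical, and averaging the last three sprints is an assumed forecasting rule.

```python
# Minimal sketch: translate story points into sprint impact using recent velocity.
# Velocities and point values are hypothetical.
import math
from statistics import mean


def sprints_to_finish(points_ahead: int, request_points: int,
                      recent_velocities: list[int]) -> int:
    """Sprints until a request is done if it sits behind points_ahead of higher-priority work."""
    velocity = mean(recent_velocities)
    if velocity <= 0:
        raise ValueError("velocity must be positive")
    return math.ceil((points_ahead + request_points) / velocity)


recent_velocities = [21, 18, 24]  # story points completed in the last three sprints
points_ahead = 42                 # already-prioritized work above the new request
request_points = 13               # team's estimate for the stakeholder's request

print("Request finishes in sprint", sprints_to_finish(points_ahead, request_points, recent_velocities))
print("Existing work alone:", sprints_to_finish(points_ahead, 0, recent_velocities), "sprints")
```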

Issue 3: Overloaded Specialists Causing Delays

- Problem: A few key specialists (e.g., a statistician or a specific instrumentation expert) are over-allocated across multiple projects, creating a bottleneck.

- Solution:

- Apply Critical Chain Method (CCM): Use CCM to map task dependencies based on actual resource availability, not just ideal task order. This method introduces buffers to protect the project schedule from disruptions caused by shared, overloaded resources [35].

- Implement Resource Smoothing: If the project deadline is fixed, use resource smoothing to redistribute tasks within the existing schedule's slack to avoid spikes in the specialist's workload [35].

- Integrate Learning & Development: Proactively identify these skill gaps and encourage cross-training or continuous learning for other team members to build secondary support for these specialized skills [33].
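Critical chain buffers are usually sized from the padding removed from individual task estimates. The sketch below shows two common heuristics from critical chain practice: the 50% "cut and paste" rule and the root-square method. The task names and safe/aggressive estimates are hypothetical. The chain is assumed to be already ordered around the specialist's availability.

```python
# Minimal sketch of critical chain project buffer sizing. Estimates are hypothetical.
import math

# (task, safe estimate in days, aggressive ~50%-confidence estimate in days)
critical_chain = [
    ("Method validation runs", 10, 6),
    ("Statistical review (specialist)", 6, 3),
    ("Report and QA sign-off", 5, 3),
]


def chain_length(tasks) -> float:
    """Chain duration using aggressive estimates (padding removed from each task)."""
    return sum(aggressive for _, _, aggressive in tasks)


def buffer_cut_and_paste(tasks) -> float:
    """Project buffer = 50% of the aggressive chain length."""
    return 0.5 * chain_length(tasks)


def buffer_root_square(tasks) -> float:
    """Project buffer = square root of the sum of squared removed padding."""
    return math.sqrt(sum((safe - aggressive) ** 2 for _, safe, aggressive in tasks))


length = chain_length(critical_chain)
print(f"Chain length: {length} days")
print(f"With 50% buffer: {length + buffer_cut_and_paste(critical_chain):.1f} days")
print(f"With root-square buffer: {length + buffer_root_square(critical_chain):.1f} days")
```

The root-square method tends to give a smaller buffer on longer chains because independent overruns rarely all occur at once. The 50% rule is simpler to explain to stakeholders.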

Experimental Protocols & Methodologies

Protocol 1: Implementing a Scrum Framework for a Forensic Casework Backlog

This protocol outlines the steps to manage a backlog of forensic cases using Scrum.

- Create a Prioritized Product Backlog: Compile all active cases into a single, prioritized list (the Product Backlog). The prioritization should be based on factors such as judicial urgency, severity of the crime, and potential for quick resolution [34].

- Sprint Planning: Select the top-priority cases from the backlog that the team can reasonably commit to during a fixed-length sprint (e.g., 2-4 weeks). This selection becomes the Sprint Backlog [40] [34].

- Sprint Execution & Daily Stand-ups: The team works on the committed cases. Each day, hold a 15-minute stand-up meeting where each investigator answers: What did I do yesterday? What will I do today? Are there any impediments? [40]

- Sprint Review & Retrospective: At the end of the sprint, review the completed cases with stakeholders. Subsequently, hold a retrospective to discuss what went well, what didn't, and how to improve the process for the next sprint [40].
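The first two steps of the protocol can be sketched as a scoring function plus a capacity check. The weights for judicial urgency, severity and potential for quick resolution are hypothetical, as are the 1-5 ratings, case numbers and story-point estimates. In practice the team, not the script, makes the final sprint commitment.

```python
# Minimal sketch: prioritize the Product Backlog and fill a sprint up to capacity.
# Weights, ratings, case numbers and effort estimates are hypothetical.
from dataclasses import dataclass

PRIORITY_WEIGHTS = {"judicial_urgency": 0.5, "severity": 0.3, "quick_resolution": 0.2}


@dataclass
class Case:
    case_id: str
    judicial_urgency: int   # 1-5
    severity: int           # 1-5
    quick_resolution: int   # 1-5, likelihood of a fast, conclusive result
    effort_points: int      # team's relative estimate

    @property
    def priority(self) -> float:
        return sum(w * getattr(self, attr) for attr, w in PRIORITY_WEIGHTS.items())


def product_backlog(cases: list[Case]) -> list[Case]:
    """Order all active cases by descending priority score."""
    return sorted(cases, key=lambda c: c.priority, reverse=True)


def plan_sprint(backlog: list[Case], capacity_points: int) -> list[Case]:
    """Take cases from the top of the backlog while they fit the team's capacity."""
    sprint, used = [], 0
    for case in backlog:
        if used + case.effort_points <= capacity_points:
            sprint.append(case)
            used += case.effort_points
    return sprint


cases = [
    Case("2025-009", 4, 3, 3, 13),
    Case("2025-014", 5, 5, 2, 8),
    Case("2025-030", 2, 2, 4, 5),
    Case("2025-021", 3, 4, 5, 3),
]
backlog = product_backlog(cases)
sprint_backlog = plan_sprint(backlog, capacity_points=20)
print([c.case_id for c in sprint_backlog])
```

Cases that do not fit the remaining capacity are skipped here. A team might instead split a large case into smaller work items.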

Protocol 2: Kanban for Continuous Digital Forensics Triage

This protocol is for managing a continuous inflow of digital evidence, focusing on workflow visualization and limiting work-in-progress.

- Visualize the Workflow: Create a Kanban board with columns such as "Backlog," "Triage/Acquisition," "Analysis," "Reporting," and "Done" [40].

- Set Work-in-Progress (WIP) Limits: Assign a maximum number of items that can be in any given column (especially "Analysis") at one time. This prevents overloading analysts and exposes bottlenecks [40].

- Pull, Don't Push: When an analyst finishes an item and moves it to "Reporting," they pull the next highest-priority item from the "Triage/Acquisition" column into the "Analysis" column. This ensures a smooth, pull-based workflow [40].

- Feedback and Iteration: Maintain close collaboration with end-users (investigators). Use their continuous feedback to make small, iterative improvements to the triage and analysis tools, following Agile development principles [41].
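The WIP-limit and pull rules in this protocol can be modeled as a small board object. Column names follow the protocol. The WIP limits and item IDs are hypothetical, and each column's list is assumed to be kept in priority order.

```python
# Minimal sketch of a Kanban board with WIP limits and pull-based movement.
# WIP limits and item IDs are hypothetical.
from typing import Optional

COLUMNS = ["Backlog", "Triage/Acquisition", "Analysis", "Reporting", "Done"]
WIP_LIMITS = {"Triage/Acquisition": 3, "Analysis": 2, "Reporting": 2}  # no limit on Backlog/Done


class KanbanBoard:
    def __init__(self) -> None:
        self.columns: dict[str, list[str]] = {name: [] for name in COLUMNS}

    def add(self, item: str) -> None:
        self.columns["Backlog"].append(item)

    def pull(self, target: str, item: Optional[str] = None) -> Optional[str]:
        """Pull an item from the preceding column into target if target is under its WIP limit.

        With no item given, the top (highest-priority) item of the preceding column is pulled.
        """
        idx = COLUMNS.index(target)
        if idx == 0:
            raise ValueError("items enter the Backlog via add()")
        limit = WIP_LIMITS.get(target)
        if limit is not None and len(self.columns[target]) >= limit:
            return None  # WIP limit reached: finish work before starting more
        source = self.columns[COLUMNS[idx - 1]]
        if item is None:
            if not source:
                return None
            item = source[0]
        source.remove(item)  # raises ValueError if item is not in the preceding column
        self.columns[target].append(item)
        return item


board = KanbanBoard()
for case in ["D-1", "D-2", "D-3", "D-4"]:
    board.add(case)
for _ in range(3):
    board.pull("Triage/Acquisition")
blocked = board.pull("Triage/Acquisition")  # None: Triage is at its WIP limit of 3
board.pull("Analysis")
board.pull("Analysis")
board.pull("Reporting", "D-1")  # analyst finishes D-1 and moves it on
board.pull("Analysis")          # the freed slot lets D-3 be pulled in
print(board.columns)
```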

Workflow Visualization

Agile Scrum Sprint Cycle for Lab Research

Resource Allocation Method Decision Guide

The Scientist's Toolkit: Research Reagent Solutions

The following table details key "reagents" – in this context, the essential project management tools and materials – needed for implementing flexible resource allocation frameworks.

| Item | Function & Application |
|---|---|
| Prioritized Backlog | A dynamic list of all work items (cases, experiments) ordered by importance. Serves as the single source of truth for what to work on next, ensuring resources are allocated to the highest-value tasks [40] [34]. |
| Skills Inventory | A living database (e.g., a spreadsheet or integrated software feature) tracking team members' skills, availability, and professional interests. Enables rapid identification and reassignment of the right human resources to emerging tasks [33]. |
| Kanban/Scrum Board | A visual tool (physical or digital) to display work items as they flow through process stages. Provides transparency, reveals bottlenecks, and helps balance workloads by limiting work-in-progress [40] [37]. |
| Sprint Timer | A time-boxing mechanism (e.g., a 2-week calendar cycle). Creates a rhythm for planning, execution, and feedback, forcing regular reassessment of priorities and resource allocation [40] [42]. |
| Retrospective Template | A structured format for conducting sprint retrospectives. Facilitates continuous improvement by allowing the team to reflect on what worked, what didn't, and how to optimize processes and resource usage moving forward [40] [36]. |

Technical Support Center: FAQs & Troubleshooting Guides

Frequently Asked Questions (FAQs)

Q: What is the primary official website for finding federal grant opportunities?

A: The primary website for discovering federal grant opportunities is Grants.gov [43]. This is the central hub where federal agencies post funding opportunities for organizations and entities supporting government-funded programs and projects.

Q: Who is eligible to apply for federal grants listed on Grants.gov?

A: Federal funding opportunities on Grants.gov are intended for organizations and entities, not individuals seeking personal financial assistance [43]. This includes organizations supporting the development and management of government-funded programs and projects. Determining your organization's eligibility is a critical first step before applying.